Many SaaS applications can benefit from using large language models (LLMs) in customer support. LLMs can automate responses, personalize interactions, and make your support team more efficient. This guide walks through practical strategies for integrating them into your support systems so you can meet or exceed customer expectations while reducing your team's workload. For a broader overview, see A Guide to Using LLMs for Customer Support Solutions.

Key Takeaways:

- Integrate LLMs to automate responses for common customer queries, improving response times and allowing human agents to focus on complex issues.

- Use the sentiment-analysis capabilities of LLMs to gauge customer emotions and tailor responses accordingly, improving overall customer satisfaction.

- Continuously train and fine-tune LLMs on real customer interaction data to improve the accuracy and relevance of their responses over time.

Transforming Customer Interactions: The Role of LLMs in SaaS

LLMs let SaaS applications offer personalized, real-time support that addresses each customer's specific needs. These models can interpret customer queries and reply in natural, conversational language, which makes the support experience feel more seamless. For insights on implementing these capabilities in your business, see How SaaS Businesses Can Leverage LLMs and Rapidly ….

Breaking Down Traditional Support Barriers

Traditional customer support often suffers from long wait times and inefficient responses. LLMs help break down these barriers by automating and streamlining routine interactions. Instant answers to common queries improve customer satisfaction and free your support team to focus on more complex issues, which shortens response times and improves the overall customer experience.

Enhancing Human-Machine Collaboration

Integrating LLMs into your support workflow creates a collaborative arrangement between human agents and AI. You can play to the strengths of both by letting LLMs handle routine inquiries while human agents take on complex or sensitive situations. This division of labor raises overall efficiency: the AI absorbs the repetitive tasks, and your agents have more time for queries that call for empathy or nuanced understanding.
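A minimal sketch of this split between bot and human, assuming a hypothetical `classify_intent` function stands in for the LLM call. So the example runs offline, it uses a keyword heuristic instead of a model; the intent names, sensitive keywords, and 0.75 confidence threshold are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass

# Hypothetical intents the bot may answer on its own.
ROUTINE_INTENTS = {"password_reset", "billing_date", "export_data"}
# Hypothetical topics that always go to a person.
SENSITIVE_KEYWORDS = ("refund", "cancel", "legal", "complaint")


@dataclass
class RoutingDecision:
    handler: str  # "bot" or "human"
    reason: str


def classify_intent(message: str) -> tuple[str, float]:
    """Stand-in for an LLM intent classifier: returns (intent, confidence).

    A real system would call a model here; this keyword lookup only
    exists so the sketch runs without one.
    """
    text = message.lower()
    if "password" in text:
        return "password_reset", 0.92
    if "invoice" in text or "billing date" in text:
        return "billing_date", 0.85
    if "export" in text:
        return "export_data", 0.80
    return "unknown", 0.30


def route(message: str, min_confidence: float = 0.75) -> RoutingDecision:
    """Send routine, confidently classified messages to the bot; everything else to a human."""
    text = message.lower()
    # Sensitive topics bypass automation regardless of classifier output.
    if any(word in text for word in SENSITIVE_KEYWORDS):
        return RoutingDecision("human", "sensitive topic")
    intent, confidence = classify_intent(message)
    if intent in ROUTINE_INTENTS and confidence >= min_confidence:
        return RoutingDecision("bot", f"routine intent: {intent}")
    return RoutingDecision("human", "low confidence or non-routine intent")


print(route("How do I reset my password?"))
# RoutingDecision(handler='bot', reason='routine intent: password_reset')
print(route("I want a refund for last month"))
# RoutingDecision(handler='human', reason='sensitive topic')
```

Checking for sensitive topics before running the classifier means that even a confidently classified message still reaches a person when the stakes are high.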

Unleashing Automation: Streamlining Customer Queries with AI

Automating parts of customer support changes how you handle queries, leading to faster resolution times and higher user satisfaction. With LLMs, your SaaS application can answer repetitive questions, draft helpful responses, and streamline interactions with users. For more ideas on improving customer support, see Level Up Your Support: 5 Game-Changing Ways LLMs & ….

Effective Chatbot Implementations for Instant Assistance

AI-driven chatbots offer instant assistance around the clock. They can respond immediately to common inquiries, easing the pressure on your support team while giving customers timely help. Because they can absorb high volumes of requests, your team is free to focus on the more complex issues that require human intervention.
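A chatbot that answers common inquiries often works by matching the incoming question against a curated set of known answers and handing off when no match is close enough. The sketch below uses Jaccard word overlap as a simple stand-in for the embedding-based similarity a production system would more likely use; the `FAQ` entries and the 0.3 threshold are made up for illustration.

```python
import re
from typing import Optional

# Hypothetical FAQ knowledge base: normalized question -> canned answer.
FAQ = {
    "how do i reset my password": "Use the 'Forgot password' link on the sign-in page.",
    "how do i export my data": "Go to Settings > Data and choose Export.",
    "when am i billed": "Invoices are issued on the first day of each billing cycle.",
}


def tokens(text: str) -> set[str]:
    """Lowercase word tokens with punctuation stripped."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Size of the intersection divided by size of the union (0.0 to 1.0)."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def answer(query: str, threshold: float = 0.3) -> Optional[str]:
    """Return the best-matching FAQ answer, or None to signal a human handoff."""
    q = tokens(query)
    best_question, best_score = None, 0.0
    for question in FAQ:
        score = jaccard(q, tokens(question))
        if score > best_score:
            best_question, best_score = question, score
    if best_question is None or best_score < threshold:
        return None  # weak match: escalate instead of guessing
    return FAQ[best_question]


print(answer("How can I reset my password?"))
print(answer("Does your API support webhooks?"))
```

Here the first query returns the password-reset answer, while the unrelated webhook question returns `None`, which the caller can treat as the signal to route the conversation to a human agent.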

Case Studies of Successful Automation in SaaS Environments

Automation has delivered measurable results across a range of SaaS platforms. The following case studies show how businesses have streamlined their customer support processes with AI:

- Company A: Achieved a 60% reduction in response time by implementing an AI chatbot, leading to a 30% increase in customer satisfaction scores.

- Company B: Managed to handle 80% of customer inquiries automatically with their LLM-based chatbot, freeing up human agents for more complex tasks.

- Company C: Increased support team efficiency by 50% after automating, enabling the team to resolve customer issues faster and contributing to a 20% rise in retention rates.

- Company D: Saw a 40% decrease in support-related operational costs after implementing multilingual chatbots, which also expanded its reach in global markets.

These case studies illustrate what automation can do for customer support. With LLMs, you can improve response times and overall customer satisfaction, and each example shows gains in efficiency, customer engagement, or operating costs. Together, these results make a strong case for building AI-driven automation into your SaaS business strategy.

Personalization at Scale: Tailoring Support with LLM Insights

Integrating LLM insights into customer support enables you to deliver tailored experiences at scale. By analyzing customer interactions, preferences, and behaviors, you can create responses that feel personalized, making your users feel valued and heard. The result is a proactive support system that anticipates needs and resolves issues efficiently, enhancing customer satisfaction and loyalty.

Leveraging Data to Anticipate Customer Needs

Customer data lets you spot trends and identify common pain points before they escalate. LLMs can extract actionable insights from historical interactions, helping you predict future behavior and tailor your responses accordingly. This foresight lets you address issues before customers even raise them, creating a smoother customer journey.
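One simple way to surface emerging pain points is to compare how often each topic appears in two consecutive time windows. The sketch below assumes each ticket has already been labeled with a topic (for example by an LLM classifier); the function name `rising_topics` and its `min_count` and `growth` thresholds are hypothetical.

```python
from collections import Counter


def rising_topics(previous: list[str], recent: list[str],
                  min_count: int = 3, growth: float = 2.0) -> list[tuple[str, int, int]]:
    """Flag topics whose ticket count grew by at least `growth` times.

    `previous` and `recent` hold one topic label per ticket for two
    equal-length windows (e.g. last week and this week).
    Returns (topic, previous_count, recent_count), largest jump first.
    """
    before, after = Counter(previous), Counter(recent)
    flagged = []
    for topic, count in after.items():
        baseline = before.get(topic, 0)
        # max(baseline, 1) avoids division by zero for brand-new topics.
        if count >= min_count and count / max(baseline, 1) >= growth:
            flagged.append((topic, baseline, count))
    flagged.sort(key=lambda row: row[2] / max(row[1], 1), reverse=True)
    return flagged


last_week = ["billing"] * 4 + ["login"] * 2 + ["export"] * 3
this_week = ["billing"] * 5 + ["login"] * 7 + ["sso"] * 3
print(rising_topics(last_week, this_week))  # [('login', 2, 7), ('sso', 0, 3)]
```

Billing tickets rose only from 4 to 5, so they are not flagged, while the jump in login issues and a brand-new SSO topic are surfaced for investigation.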

Dynamic Response Generation for Enhanced User Experience

Dynamic response generation uses LLMs to formulate context-specific replies that resonate with individual customer interactions. This technology allows you to craft messages that adapt to the user’s tone, previous conversations, and unique challenges, making every interaction feel bespoke.

Imagine a scenario where a customer raises a recurring issue about billing discrepancies. Instead of sending a generic response, your system crafts a message that acknowledges their history, provides detailed information based on previous tickets, and offers actionable next steps. By applying LLM-driven dynamic responses, you not only enhance user experience but also foster a deeper connection with your customers, leading to long-term loyalty and trust. This approach turns ordinary troubleshooting into a personalized dialogue that addresses specific concerns while reinforcing your brand’s commitment to excellent customer service.
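A dynamic response like the billing example usually starts with a prompt that carries the customer's history. The sketch below assembles such a prompt; the `Ticket` fields, the instruction wording, and the three-ticket cap are assumptions, and the resulting string would be sent to whichever model API you use (no model call is shown).

```python
from dataclasses import dataclass


@dataclass
class Ticket:
    ticket_id: str
    subject: str
    resolution: str


def build_prompt(customer_name: str, message: str, history: list[Ticket],
                 max_history: int = 3) -> str:
    """Assemble a context-rich prompt from the customer's most recent tickets."""
    recent = history[-max_history:]  # keep only the newest tickets to limit prompt size
    if recent:
        lines = [f"- #{t.ticket_id}: {t.subject} (resolved: {t.resolution})" for t in recent]
        history_block = "\n".join(lines)
    else:
        history_block = "- No previous tickets."
    return (
        "You are a support assistant for a SaaS product.\n"
        f"Customer: {customer_name}\n"
        "Previous tickets:\n"
        f"{history_block}\n\n"
        "Acknowledge any related history, explain the likely cause, "
        "and list concrete next steps. If unsure, offer a human agent.\n\n"
        f"New message: {message}"
    )


history = [
    Ticket("1042", "Charged twice in March", "duplicate charge refunded"),
    Ticket("1107", "Invoice shows wrong plan", "plan corrected"),
]
print(build_prompt("Dana", "My invoice looks wrong again.", history))
```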

Measuring Success: Key Metrics for Evaluating LLM Impact

Assessing the effectiveness of large language models (LLMs) in customer support requires a closer look at specific metrics. These metrics serve as indicators, helping you to quantify the benefits gained from implementing LLMs within your SaaS application. By analyzing elements such as customer satisfaction, retention rates, response times, and resolution efficiency, you can identify areas for improvement and gauge the overall impact on your support operations.

Customer Satisfaction and Retention Rates

Customer satisfaction (CSAT) scores and retention rates are fundamental measures of how well an LLM-enhanced support team is performing. More accurate responses and shorter resolution times improve how customers perceive your support, which raises satisfaction. For instance, some companies using advanced AI in their support have reported a 15% increase in CSAT scores and a corresponding 10% boost in customer retention, which contributes to long-term profitability.
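CSAT is commonly calculated as the percentage of survey responses in the top two ratings of a 1 to 5 scale, and retention rate as (customers at period end - new customers) / customers at period start. Conventions vary between teams, so treat the 4-or-higher cutoff in this sketch as an assumption.

```python
def csat(ratings: list[int]) -> float:
    """CSAT as the % of survey responses rated 4 or 5 on a 1-5 scale."""
    if not ratings:
        raise ValueError("no survey responses")
    satisfied = sum(1 for r in ratings if r >= 4)
    return 100.0 * satisfied / len(ratings)


def retention_rate(start: int, end: int, new: int) -> float:
    """Customer retention % for a period: (end - new) / start * 100."""
    if start <= 0:
        raise ValueError("start must be positive")
    return 100.0 * (end - new) / start


print(csat([5, 4, 3, 5, 2, 4, 5, 4]))              # 6 of 8 satisfied -> 75.0
print(retention_rate(start=200, end=210, new=30))  # (210 - 30) / 200 -> 90.0
```

Computing both figures the same way before and after an LLM rollout keeps the comparison meaningful.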

Analyzing Response Times and Resolution Efficiency

Response time and resolution efficiency are key indicators of support effectiveness. For queries an LLM can answer directly, first-response times can drop from hours to seconds, and agents are freed to prioritize complex queries. Some companies using LLMs have reported up to a 50% reduction in resolution time, showing how automation speeds up issue handling, improves workflow, and ultimately improves the customer experience.

Faster responses generally go hand in hand with higher customer satisfaction, because users appreciate prompt attention to their issues. By analyzing both the average time to first response and the overall time to resolution, you can see how LLMs streamline your support operations. For instance, a support team that cut its average response time by 4 hours through automation saw a notable increase in positive feedback, while its human agents gained time to focus on complex queries. The shift improves efficiency and also lets customers who need more nuanced support receive more compassionate care.
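Both timings can be computed from ticket timestamps. The sketch below assumes a hypothetical log of `(created, first_response, resolved)` tuples; it reports the median alongside the mean because a few slow tickets can skew the average, and `pct_change` expresses a before/after comparison such as a 50% reduction.

```python
from datetime import datetime, timedelta
from statistics import mean, median

# Hypothetical ticket log: (created, first_response, resolved) timestamps.
start = datetime(2024, 5, 1, 9, 0)
tickets = [
    (start, start + timedelta(minutes=1), start + timedelta(hours=2)),
    (start, start + timedelta(hours=4), start + timedelta(hours=10)),
    (start, start + timedelta(minutes=2), start + timedelta(hours=1)),
]


def hours(delta: timedelta) -> float:
    """Convert a timedelta to fractional hours."""
    return delta.total_seconds() / 3600


def summarize(log):
    """Mean and median first-response time, plus mean resolution time, in hours."""
    first = [hours(responded - created) for created, responded, _ in log]
    resolution = [hours(resolved - created) for created, _, resolved in log]
    return {
        "avg_first_response_h": mean(first),
        "median_first_response_h": median(first),
        "avg_resolution_h": mean(resolution),
    }


def pct_change(before: float, after: float) -> float:
    """Relative change in percent; negative means the metric went down."""
    return 100.0 * (after - before) / before


print({name: round(value, 2) for name, value in summarize(tickets).items()})
print(pct_change(before=10.0, after=5.0))  # -50.0
```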

Navigating Challenges: Common Pitfalls and Solutions in LLM Deployment

Implementing LLMs in customer support brings challenges that can affect the overall effectiveness of your SaaS application. By anticipating common pitfalls, such as integration difficulties, scalability issues, and inconsistent performance, you can protect the user experience and keep operations running smoothly. Careful planning and continual adjustment during deployment make integration smoother and help maximize customer satisfaction.

Overcoming Data Privacy and Ethical Concerns

Data privacy must remain a top priority when you deploy LLMs in your support strategy. Protecting sensitive customer information while using AI tools means adhering to applicable regulations and ethical guidelines. Encrypting data in transit and at rest, anonymizing personal data before it reaches the model, and training your team on data-handling policies all help mitigate risks and reassure customers that their data is safe.
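Anonymizing data can begin with masking obvious identifiers before a message is sent to a model or stored for training. The sketch below redacts email addresses and phone numbers with regular expressions; the patterns are deliberately simple, will miss some formats and over-match others (long digit runs such as dates), and are no substitute for a dedicated PII-detection tool or a compliance review.

```python
import re

# Deliberately simple pattern: local part, "@", then a dotted domain.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
# Optional "+", then at least 9 characters of digits, spaces, dots,
# dashes, or parentheses, starting and ending with a digit.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    # Redact emails first so digits inside an address are not mistaken for a phone.
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


print(redact("Hi, I'm jane.doe@example.com, call me at +1 (555) 123-4567."))
# Hi, I'm [EMAIL], call me at [PHONE].
```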

Addressing User Frustrations and AI Limitations

Users are often frustrated by the limitations of AI, and that frustration shapes how they interact with your support system. Be upfront that LLMs may not always grasp context or nuances in language, which can lead to misunderstandings. Communicating clearly about what the AI can and cannot do helps set realistic user expectations. Providing easy access to human agents for complex queries further improves the overall support experience and reduces dissatisfaction.

Being transparent about LLM limitations lays the groundwork for effective customer interactions. Consider implementing a feedback loop that lets users report unsatisfactory AI responses directly. This data can guide future training iterations, refine the model, and improve performance. Showing clear examples of how your AI assists customers also demystifies the process and builds trust over time. Combining LLM capabilities with human oversight elevates customer service and helps users feel more connected to the support they receive.
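A feedback loop can start as simply as recording a helpful/not-helpful rating for each AI answer and ranking intents by how often they disappoint. The sketch below assumes a hypothetical `Feedback` record tagged with an intent label; `min_reports` keeps rarely seen intents from dominating the ranking, and the JSONL export of unhelpful conversations is just one possible format for human review or later training data.

```python
import json
from collections import Counter
from dataclasses import dataclass, asdict


@dataclass
class Feedback:
    conversation_id: str
    intent: str
    helpful: bool
    comment: str = ""


def worst_intents(feedback: list[Feedback], min_reports: int = 5) -> list[tuple[str, float]]:
    """Rank intents by their share of 'not helpful' ratings, worst first."""
    totals = Counter(f.intent for f in feedback)
    unhelpful = Counter(f.intent for f in feedback if not f.helpful)
    rates = [
        (intent, unhelpful[intent] / count)
        for intent, count in totals.items()
        if count >= min_reports  # skip intents with too few ratings to judge
    ]
    return sorted(rates, key=lambda pair: pair[1], reverse=True)


def to_jsonl(feedback: list[Feedback]) -> str:
    """Serialize unhelpful conversations, one JSON object per line, for review."""
    return "\n".join(json.dumps(asdict(f)) for f in feedback if not f.helpful)


ratings = (
    [Feedback(f"b{i}", "billing", helpful=i >= 3) for i in range(6)]
    + [Feedback(f"l{i}", "login", helpful=i != 0) for i in range(5)]
    + [Feedback("s1", "sso", helpful=False), Feedback("s2", "sso", helpful=False)]
)
print(worst_intents(ratings))  # [('billing', 0.5), ('login', 0.2)]
```

The two SSO reports are excluded from the ranking because they fall below `min_reports`, but they still appear in the JSONL export so a reviewer can see them.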

Conclusion

Leveraging LLMs can significantly improve customer support in SaaS applications. By integrating these models, you can shorten response times, provide accurate solutions, and create more engaging interactions with your users. Automated chatbots, personalized responses, and robust analytics streamline your support processes and raise customer satisfaction. Adopting LLM technology positions you to meet rising customer expectations and stand out in a competitive market.

Q: What are Large Language Models (LLMs) and how can they be applied in customer support for SaaS applications?

A: Large Language Models (LLMs) are artificial intelligence systems trained on vast amounts of text, which lets them understand and generate human-like language. In the context of customer support for SaaS applications, LLMs can automate responses to frequently asked questions, guide users through troubleshooting, and provide personalized assistance. By integrating LLMs into customer support workflows, SaaS companies can increase efficiency, reduce response times, and improve user satisfaction while freeing human agents to focus on more complex issues that require empathy and judgment.

Q: What are some best practices for implementing LLMs in a SaaS customer support environment?

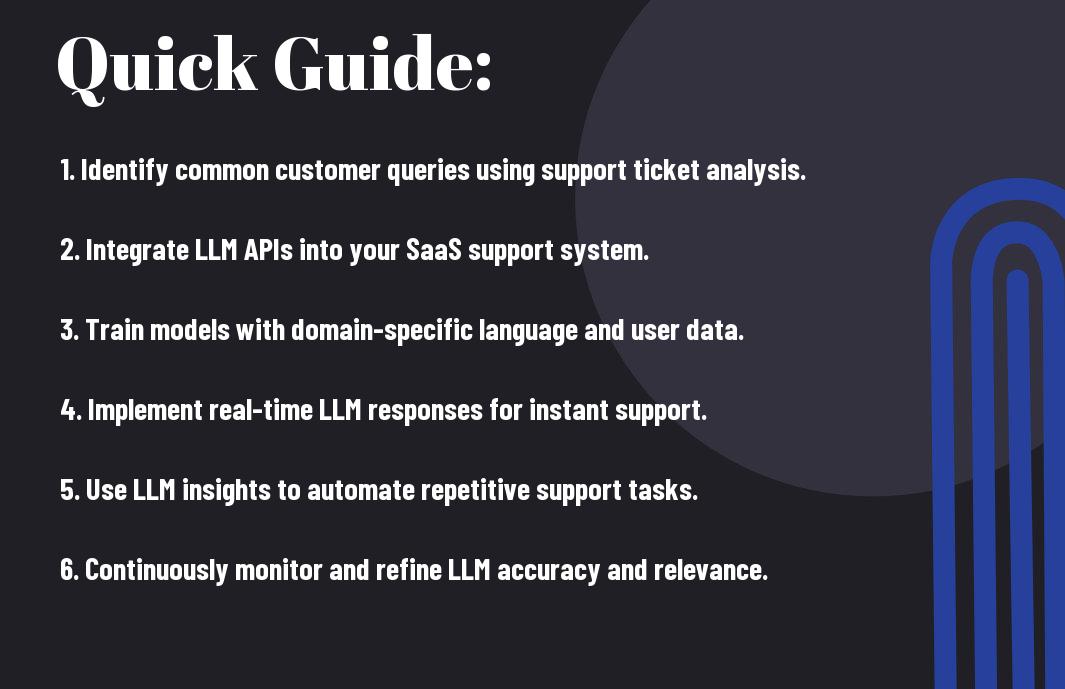

A: When implementing LLMs in a customer support environment, it is advisable to start with a well-defined strategy. First, identify common user queries and support scenarios that can be effectively addressed by the LLM. Training the model with relevant data from past customer interactions can improve accuracy. Regularly updating the model with new information and user feedback is vital for maintaining its effectiveness. An important practice is to ensure seamless handoffs to human agents for cases where the LLM cannot provide satisfactory answers. Additionally, monitoring performance metrics will help in refining the system continuously.

Q: How can LLMs improve the overall customer experience in SaaS applications?

A: LLMs enhance the customer experience in several ways. By providing immediate responses to queries, they drastically reduce waiting times for users seeking help. The personalized insights generated by LLMs can make support interactions feel more tailored to individual needs, which fosters a connection between the user and the brand. Furthermore, LLMs can gather insights from customer interactions, identifying recurring issues or trends that can be used to improve the product or service. By providing comprehensive and efficient support, LLMs contribute significantly to increasing user satisfaction and retention in SaaS applications.