With the rapid advancement of technology, Large Language Models (LLMs) have emerged as powerful tools that are revolutionizing various industries. From assisting in natural language processing to generating human-like text, LLMs have the potential to impact our daily lives in significant ways. In this beginner’s guide, we will explore the capabilities and applications of LLMs, as well as provide insights into how you can harness their power effectively.

Understanding the fundamentals of LLMs is essential for anyone who wants to keep up with the field. Models such as GPT-3 and BERT process and generate text at a scale that earlier systems could not match. That capability brings responsibility: the ethical questions around how LLMs are trained and used remain actively debated.

Whether you are a student, a professional, or simply someone curious about the potential of LLMs, this guide will provide you with the knowledge and tools needed to navigate this exciting field. By unlocking the power of LLMs, you can enhance your productivity, improve communication, and explore new possibilities in the world of artificial intelligence and language processing.

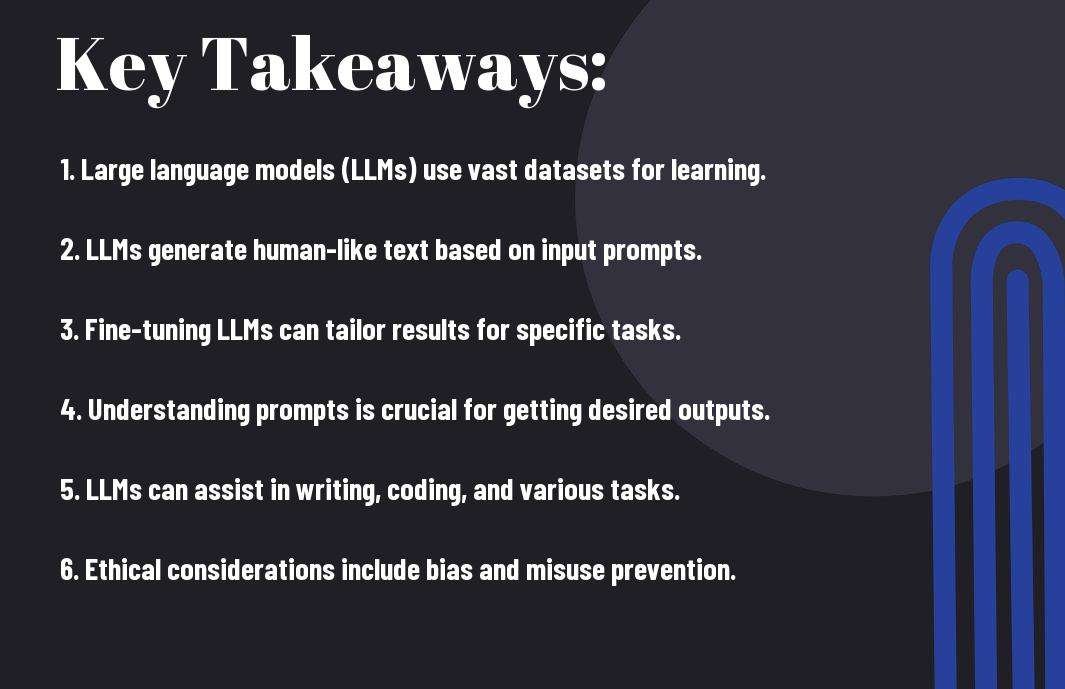

Key Takeaways:

- Large Language Models (LLMs) Basics: LLMs are a type of AI model that learns patterns and structures in language data to generate human-like text.

- Applications of LLMs: LLMs can be used in various fields such as natural language processing, chatbots, translation, summarization, and more.

- Training LLMs: Pre-training an LLM requires massive amounts of text data and computational power; the pre-trained model can then be fine-tuned on a smaller dataset for specific tasks.

- Challenges with LLMs: LLMs may exhibit biases, generate nonsensical content, or struggle with understanding context, leading to potential ethical concerns.

- Future of LLMs: Continued research and development in the field of LLMs aim to address current limitations and unlock even greater potential for these powerful language models.

Foundations of Language Models

Historical Context and Evolution of Language Models

Language models powered by large neural networks have gained widespread attention in recent years, but it’s essential to understand their historical context and evolution. Language modeling dates back to the early days of artificial intelligence and natural language processing. Traditional language models relied on statistical methods and rule-based approaches to understand and generate human language. With advances in deep learning and the availability of vast amounts of text data, large language models have since reshaped the field.

Today, we see the evolution of language models from simple n-grams to sophisticated transformers like GPT-3. These models can generate coherent text, answer questions, and even engage in conversations that mimic human-like responses. Language models have come a long way in capturing the complexities of language structure and semantics, opening up new possibilities in various applications, including chatbots, language translation, and content generation.

The historical context and evolution of language models highlight the journey from basic language processing to the era of advanced AI-powered models. Understanding this progression is crucial for appreciating the capabilities and limitations of modern language models.

Basics of Machine Learning and Artificial Intelligence

Progress in Machine Learning and Artificial Intelligence laid the groundwork for the development of large language models. Machine learning algorithms enable systems to learn from data, identify patterns, and make decisions without being explicitly programmed for each case. Within machine learning, deep learning emerged as a subfield that uses multi-layer neural networks to learn representations directly from data.

Artificial Intelligence encompasses a broader spectrum of technologies aimed at simulating human intelligence in machines. Language models are a prime example of AI applications that demonstrate the ability to understand and generate natural language. By leveraging machine learning techniques and neural networks, AI systems can perform tasks that were once thought to be exclusive to human cognition.

The combination of machine learning and artificial intelligence has paved the way for state-of-the-art language models that exhibit remarkable language understanding and generation capabilities. As we delve deeper into the workings of large language models, it becomes evident that their foundation lies in the principles of machine learning and artificial intelligence.

Understanding Large Language Models

Despite the recent surge of interest and excitement around the applications of Large Language Models (LLMs), many people are still unfamiliar with the concept and potential of these tools. To dig deeper into this topic, see my blog post Unlocking the Power of Large Language Models (LLMs), a comprehensive guide that helps beginners grasp the fundamentals of LLMs and their significance in various fields.

Defining Large Language Models

Understanding Large Language Models starts with their core premise. These models, such as GPT-3 (Generative Pre-trained Transformer 3), are massive neural networks trained on vast amounts of text data. The training objective for GPT-style models is to predict the next token (a word or word fragment) given the preceding context. Repeating that prediction one token at a time lets LLMs generate human-like text and perform a wide range of natural language processing tasks fluently.
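To make the prediction objective concrete, here is a minimal sketch of next-word prediction using a bigram counter. It is far simpler than an LLM (it only looks at one previous word and uses counts instead of a neural network), and the toy corpus and function names are assumptions made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

def predict_next(counts, word):
    """Return the most likely next word, or None if `word` was never seen."""
    probs = next_word_probs(counts, word)
    return max(probs, key=probs.get) if probs else None

# Toy corpus, invented for this example.
corpus = [
    "the cat sat on the mat",
    "the cat ate the fish",
    "the dog sat on the rug",
]
model = train_bigram(corpus)
print(predict_next(model, "the"))     # "cat" follows "the" most often here
print(next_word_probs(model, "sat"))  # "sat" is always followed by "on"
```

An LLM replaces the count table with a neural network that conditions on a long context rather than a single word, but the output is the same kind of object: a probability distribution over what comes next.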

Components and Architecture of LLMs

Large Language Models are built from stacked transformer blocks. The input text is first tokenized, and each token is mapped to a vector. Each block then applies an attention mechanism, which lets every token weigh the other tokens in the sequence according to their relevance, followed by a feed-forward neural network layer. Stacking many such blocks enables LLMs to capture complex linguistic patterns and generate coherent responses.
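The attention step mentioned above can be sketched in a few lines of plain Python. This is a simplified illustration of scaled dot-product attention: real transformer layers first pass the token vectors through learned projection matrices to obtain queries, keys, and values, whereas this sketch uses the raw vectors directly. The toy vectors are assumptions chosen for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k)
                  for k in keys]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy "token" vectors used as queries, keys, and values at once.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how strongly one token attends to every token in the sequence; tokens whose vectors point in similar directions receive higher weight.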

LLMs also incorporate positional encoding. Attention on its own has no notion of word order, so position information is added to each token’s representation to preserve the order of the sequence. The attention mechanism then identifies which tokens in the input are most relevant to one another, so that the model can generate contextually appropriate outputs. These components work together to enhance the model’s ability to understand and generate human language.
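As a hedged illustration of positional encoding, the sketch below computes the fixed sinusoidal encoding described in the original Transformer paper. Many models, including GPT-style models and BERT, instead learn their position embeddings during training, so treat this as one possible scheme rather than what any particular LLM does. The function name and dimensions are assumptions for the example.

```python
import math

def positional_encoding(seq_len, d_model):
    """Sinusoidal encoding: one d_model-sized vector per position."""
    pe = []
    for pos in range(seq_len):
        row = []
        for i in range(d_model):
            # Each pair of dimensions (2k, 2k+1) shares one frequency.
            angle = pos / (10000 ** ((2 * (i // 2)) / d_model))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

pe = positional_encoding(seq_len=4, d_model=6)
for pos, row in enumerate(pe):
    print(pos, [round(x, 3) for x in row])
```

Each position gets a distinct vector, which is added to the token’s embedding so that the same word at different positions looks different to the attention layers.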

Key Players in the LLM Arena

Any beginner’s guide to machine learning should include an understanding of the key players in the Large Language Model (LLM) arena. These models have reshaped natural language processing and are at the forefront of AI research and development. Each LLM brings its own capabilities to the table, so anyone venturing into machine learning needs to grasp how these models differ and where each one applies.

Overview of Prominent LLMs and Their Capabilities

One such key player in the LLM arena is GPT-3 (Generative Pre-trained Transformer 3), developed by OpenAI. GPT-3 is known for its vast size, with 175 billion parameters, enabling it to generate human-like text and perform a wide range of natural language tasks, from translation to summarization.

Another standout LLM is BERT (Bidirectional Encoder Representations from Transformers), created by Google. BERT excels at understanding context in language and is widely used for tasks like sentiment analysis and question answering. Its bidirectional architecture lets it use the context on both sides of a word, which helps it capture deeper language relationships.

Understanding the capabilities of these prominent LLMs and how they can be leveraged in various applications is crucial for those looking to harness the power of machine learning in their projects.

Contributions of Big Tech: GPT-3, BERT, and More

BERT, along with other large language models like GPT-3, has been instrumental in pushing the boundaries of what AI can achieve in natural language understanding. These models have paved the way for advancements in chatbots, language translation, content generation, and more, demonstrating the immense potential of LLMs in transforming how we interact with AI systems.

Working with LLMs

With the rise of Large Language Models (LLMs) in natural language processing, data preparation and processing play a crucial role in the success of these models. The quality and quantity of training data directly affect LLM performance, so the data should be clean, relevant, and diverse enough to capture the nuances of language. Preprocessing steps such as tokenization, lemmatization, and sentence segmentation organize the data into a format suitable for training. Techniques like data augmentation can also increase the variability of the training data, leading to more robust language models.

Data Preparation and Processing

Effective data preparation and processing are the cornerstone of training successful Large Language Models. Preprocessing steps such as data cleaning, tokenization, and normalization are important to ensure the quality of training data. Removing noise, handling special characters, and standardizing the text format contribute to improving the model’s performance. It is also crucial to label and organize the data to facilitate supervised learning tasks. Furthermore, techniques like data augmentation, where existing data is modified or expanded, can help in enhancing the model’s ability to generalize and adapt to different linguistic patterns.
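The cleaning, normalization, and tokenization steps described above might look like the following minimal sketch. It uses only the Python standard library, and the specific rules (stripping HTML-like tags, lowercasing, splitting on word boundaries) are assumptions chosen for illustration. Production LLM pipelines typically use subword tokenizers such as byte-pair encoding rather than word-level splitting.

```python
import re
import unicodedata

def clean_text(text):
    """Normalize Unicode, strip HTML-like tags, lowercase, collapse spaces."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)      # remove markup noise
    text = text.lower()                       # standardize case
    text = re.sub(r"\s+", " ", text).strip()  # collapse whitespace
    return text

def tokenize(text):
    """Split into word tokens and standalone punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text)

raw = "  <p>Hello,   WORLD!</p>  LLMs are\tfun. "
cleaned = clean_text(raw)
tokens = tokenize(cleaned)
print(cleaned)  # hello, world! llms are fun.
print(tokens)   # ['hello', ',', 'world', '!', 'llms', 'are', 'fun', '.']
```

Whether to lowercase or strip punctuation depends on the task; the point is that each step is a small, testable function applied consistently to every document.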

Training Large Language Models: Challenges and Techniques

The training of Large Language Models poses various challenges, including computational resources, training time, and fine-tuning techniques. Training large models requires significant computational power, memory, and storage capacities. Techniques like distributed training, where the workload is distributed across multiple GPUs or TPUs, can help in overcoming these challenges. Hyperparameter tuning, regularization techniques, and early stopping are important strategies to prevent overfitting and achieve optimal model performance. Employing techniques like transfer learning, where a pre-trained model is fine-tuned on specific tasks, can also accelerate the training process and improve the model’s performance.
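Early stopping, one of the overfitting safeguards mentioned above, can be sketched as a small function that watches the validation loss. The `patience` and `min_delta` parameter names and the loss values are assumptions for illustration; training frameworks offer their own versions of this logic.

```python
def early_stopping_epoch(val_losses, patience=2, min_delta=0.0):
    """Return the index of the epoch at which training would stop.

    Training stops once the validation loss has failed to improve on the
    best value seen so far for `patience` consecutive epochs.
    """
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss       # new best: reset the counter
            bad_epochs = 0
        else:
            bad_epochs += 1   # no improvement this epoch
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran all epochs

# Hypothetical validation losses, one per epoch.
losses = [0.90, 0.70, 0.65, 0.66, 0.67, 0.60]
stop = early_stopping_epoch(losses, patience=2)
print(stop)  # 4: epochs 3 and 4 both failed to beat 0.65
```

Note the trade-off visible in the toy data: with `patience=2` training halts before the later improvement at epoch 5, so a larger patience costs more compute but tolerates temporary plateaus.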

Understanding the complexities and challenges involved in training Large Language Models is crucial for achieving optimal results. Addressing data quality, processing, and training challenges systematically can lead to the development of powerful language models that can effectively capture the intricacies of human language. By employing best practices in data preparation, processing, and training techniques, researchers and practitioners can unlock the full potential of Large Language Models and drive advancements in natural language processing applications.

Applications of Large Language Models

Natural Language Processing (NLP) Tasks

Natural Language Processing (NLP) tasks encompass a wide range of capabilities that large language models (LLMs) excel at. From sentiment analysis to text generation, machine translation to question answering, LLMs have revolutionized the way we interact with language. Sentiment analysis, for example, allows businesses to understand customer feedback at scale, while text generation can assist in content creation for various platforms.

Machine translation has also seen significant improvements with the advent of LLMs like GPT-3. The model can now translate languages with more accuracy and context awareness than ever before, making communication across different languages smoother and more efficient. Additionally, question-answering systems powered by LLMs are being used in various applications, from customer service chatbots to educational tools.

Overall, large language models have become indispensable in NLP tasks, offering unprecedented levels of accuracy and efficiency. Their ability to process and generate human-like language has opened up a wide range of possibilities for businesses and individuals alike, making complex language-related tasks more accessible and effective.

Transforming Industries with LLMs

Language models have the potential to transform industries across the board. From healthcare to finance, marketing to entertainment, LLMs are being deployed to streamline processes, drive innovation, and improve outcomes. In healthcare, for example, LLMs are being used to assist in diagnosing illnesses, analyzing medical research papers, and even providing personalized patient care recommendations.

In finance, large language models are being utilized for risk assessment, fraud detection, and investment analysis. Their ability to process and analyze vast amounts of textual data in real-time has revolutionized the way financial institutions operate, making them more efficient and accurate in decision-making processes. Similarly, in marketing and entertainment, LLMs are being leveraged to create targeted advertising campaigns, develop personalized content recommendations, and even generate scripts for movies and TV shows.

With the power of LLMs, industries are witnessing a significant shift towards automation, personalization, and optimization. Businesses that harness the capabilities of these models are gaining a competitive edge, as they can make data-driven decisions faster and more effectively. The potential of LLMs to transform industries is vast, and we are only scratching the surface of what they can achieve.

Evaluating LLM Performance

Large language models (LLMs) like GPT-3 have changed natural language processing in ways earlier language models did not. To understand their performance and capabilities, it is vital to evaluate them using a range of metrics and benchmarks. For a comprehensive guide on how to assess LLMs, refer to Understanding Large Language Models: A Beginner’s Guide, which provides a detailed overview of LLM evaluation techniques and best practices.

Metrics and Benchmarks for Assessing LLMs
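
One widely used metric for language models is perplexity, which measures how well a model predicts held-out text: lower is better. The sketch below computes it from the probabilities a model assigned to each correct token. The probability values are made up for illustration, and this is a minimal example of one metric, not a full evaluation procedure.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    nll = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(nll)

# Hypothetical probabilities two models gave to the same four correct tokens.
confident_model = [0.9, 0.8, 0.85, 0.9]
uncertain_model = [0.25, 0.25, 0.25, 0.25]

print(round(perplexity(confident_model), 3))
print(round(perplexity(uncertain_model), 3))  # 4.0
```

A perplexity of 4 means the model was, on average, as uncertain as if it were choosing uniformly among four options at each step.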

Ethical Considerations and Bias Mitigation

LLMs have shown incredible proficiency in generating human-like text and completing diverse tasks. However, along with their impressive capabilities, ethical considerations and bias mitigation strategies are critical aspects that developers and users must address. The responsibility lies with developers to ensure that LLMs are trained on diverse and inclusive datasets to minimize biases in their outputs. Additionally, continuous monitoring and auditing of LLMs can help identify and rectify any ethical concerns or biases that may arise during their usage.

On the ethical front, it is crucial to implement measures that promote transparency and accountability in the development and deployment of LLMs. By adhering to ethical guidelines and standards, developers can enhance the trustworthiness and reliability of LLM applications. Additionally, involving diverse stakeholders in the design and evaluation processes can provide valuable insights and perspectives to mitigate potential ethical pitfalls. It is imperative to strike a balance between innovation and ethical responsibility when harnessing the power of LLMs for various applications.

Finally, the deployment of LLMs should align with societal values and norms. By addressing ethical considerations and proactively implementing bias mitigation strategies, developers can harness the potential of LLMs while promoting fairness and inclusivity in their applications. Approaching LLM development and usage with a vigilant, ethical mindset is the way to navigate the complexities of language generation responsibly.

Leveraging LLMs for Innovation

Creative Uses of LLMs

Creative thinkers are now tapping into the vast potential of Large Language Models (LLMs) to revolutionize the way we approach innovation. By feeding these models with diverse datasets, researchers and entrepreneurs can generate novel ideas, concepts, and solutions that were previously unimaginable. One of the most exciting aspects of using LLMs for innovation is their ability to spark creativity and inspire breakthroughs in various fields.

The creative potential of LLMs extends beyond traditional problem-solving. These models can aid in artistic endeavors, assisting writers, musicians, and artists in generating new content. By leveraging the power of language generation, artists can collaborate with LLMs to co-create unique pieces of art, music, literature, and more. This synergy between human creativity and artificial intelligence opens up endless possibilities for innovation and expression.

Moreover, by harnessing LLMs for creative purposes, organizations can streamline their design and development processes, leading to more efficient and effective solutions. Whether it’s creating compelling marketing campaigns, designing captivating visuals, or crafting engaging storytelling, LLMs can revolutionize the way businesses approach creativity. Embracing the innovative potential of LLMs can give companies a competitive edge in the ever-evolving digital landscape.

Automating Content Creation and Beyond

LLMs have emerged as game-changers in automating content creation and beyond. These advanced language models can generate high-quality text across various formats, including articles, social media posts, product descriptions, and more. By training LLMs on specific datasets, businesses can automate repetitive writing tasks, freeing up valuable time and resources for higher-level creative work.

The Future Landscape of Large Language Models

Now that Large Language Models (LLMs) have established themselves as powerful tools in various applications, it is crucial to look towards the future and explore the potential advancements in this field. Ongoing research and development trends indicate a continuous effort to enhance the capabilities and efficiency of LLMs.

Ongoing Research and Development Trends

Research on large language models now focuses on improving their ability to understand and generate more nuanced and contextually relevant language. Researchers are working on areas such as commonsense reasoning, sentiment analysis, and multilingual capability to make LLMs more versatile and adept at handling diverse tasks.

Moreover, continuous efforts are being made to address the issues of bias and fairness in LLMs. Researchers are exploring techniques to mitigate biases present in training data and ensure that LLMs promote inclusivity and equity in their outputs.

Another noteworthy trend is the exploration of more efficient and sustainable training methods for LLMs. Researchers are investigating ways to reduce the computational resources required to train large models, making them more accessible and environmentally friendly.

Anticipating the Next Generation of LLMs

For the next generation of Large Language Models, we can anticipate even more advanced capabilities and applications. These models are expected to possess enhanced reasoning abilities, better understanding of context, and improved performance across different languages and domains.

Trends indicate that future LLMs will prioritize interpretability and transparency, allowing users to understand how these models arrive at their outputs. Additionally, advancements in multitask learning and transfer learning techniques will enable LLMs to excel in a wide range of tasks with minimal fine-tuning.

Understanding the potential impact of these advancements is crucial to harnessing the power of next-generation LLMs effectively. As these models become more sophisticated, it is crucial to stay vigilant and ensure that they are developed and utilized responsibly to benefit society as a whole.

Practical Guidance for Beginners

Getting Started with LLMs

All beginners who are venturing into the world of Large Language Models (LLMs) need to start with a solid foundation. It is imperative to familiarize yourself with the basics of how these models work, what they can be used for, and the potential challenges they present. Start by learning about the architecture, training process, and applications of LLMs. Dive into tutorials and documentation provided by the developers of popular models such as GPT-3, BERT, or T5. Make sure to get hands-on experience by experimenting with pre-trained models and fine-tuning them on specific tasks.

Experimenting with small datasets and simpler tasks can help you grasp the concepts of working with LLMs before stepping into more complex projects. Building a strong foundation in natural language processing (NLP) concepts and machine learning principles will also be beneficial. Stay curious and keep exploring different use cases and applications of LLMs to broaden your understanding and skill set.

Keep in mind, patience is key when starting out with LLMs. It may take time to fully understand and harness the power of these models, but with dedication and persistence, you can unlock their potential. Collaborating with peers, joining online communities, and attending workshops or webinars can also help you learn from others and stay updated on the latest trends in LLMs.

Best Practices and Resources for Further Learning

On your journey to mastering LLMs, it is crucial to follow best practices and leverage resources that can aid in your learning process. Document your experiments, findings, and learnings to track your progress and refer back to them when needed. Utilize online platforms like GitHub, Medium, or Kaggle to share your work and collaborate with other enthusiasts in the field. Engaging in open-source projects can also enhance your skills and knowledge.

Explore a variety of resources such as research papers, blogs, online courses, and conferences to stay updated on the latest advancements in LLMs. Networking with experts in the field and seeking mentorship can provide valuable insights and guidance. Continuous learning and staying curious are imperative to mastering LLMs and staying ahead in this rapidly evolving field.

LLMs have the potential to revolutionize various industries and bring about significant advancements in natural language understanding. However, it is important to approach their development and deployment with caution. Understanding the ethical implications, biases, and limitations of LLMs is critical to ensure responsible use and mitigate potential risks. By following best practices, staying informed, and continuously learning, beginners can unlock the power of LLMs and contribute to the advancement of artificial intelligence.

To wrap up

Considering all points discussed in this beginner’s guide to Large Language Models (LLMs), it is evident that these AI-powered tools have the potential to revolutionize various industries and transform the way we interact with technology. From generating human-like text to assisting in data analysis and decision-making processes, LLMs offer a wide range of possibilities for businesses, researchers, and individuals alike. By understanding the underlying principles and applications of LLMs, beginners can harness the power of these sophisticated tools to optimize their work and achieve better results.

As we delve deeper into the world of LLMs, it becomes evident that continuous learning and experimentation are crucial for unlocking their full potential. By staying updated on the latest advancements in machine learning and natural language processing, beginners can leverage the capabilities of LLMs to tackle complex tasks and drive innovation in their respective fields. Moreover, by adhering to ethical guidelines and embracing responsible AI practices, individuals can help ensure that LLMs are used for the benefit of society as a whole.

Hence, embracing the power of Large Language Models opens up a world of possibilities for beginners looking to explore artificial intelligence and natural language processing. By taking a proactive approach to learning and implementation, individuals can unlock the true potential of LLMs and leverage their capabilities to drive innovation and make a positive impact in the digital age. With a solid foundation in the fundamentals of LLMs and a commitment to ethical practices, beginners can pave the way for a future where AI technologies enhance human potential and transform the way we interact with the world.

FAQ

Q: What are Large Language Models (LLMs)?

A: Large Language Models (LLMs) are sophisticated artificial intelligence systems that process and understand human language at scale. They are designed to generate coherent and contextually relevant text, making them valuable tools for various natural language processing tasks.

Q: How do Large Language Models work?

A: LLMs leverage deep learning techniques, particularly neural networks, to learn from vast amounts of text data. During training, the model adjusts its parameters to capture patterns, semantics, and relationships within the language. When given a prompt, an LLM uses those learned parameters to generate text one token at a time, based on the prompt and the text it has produced so far.

Q: What are the applications of Large Language Models?

A: Large Language Models have a wide range of applications, including text generation, language translation, sentiment analysis, content summarization, and conversational agents. They are used in industries such as healthcare, finance, customer service, and content creation.

Q: How can beginners unlock the power of Large Language Models?

A: Beginners can start by familiarizing themselves with the basics of natural language processing and deep learning. They can then explore pre-trained LLMs like GPT-3 or BERT through online platforms or APIs to understand their capabilities and experiment with text generation tasks.

Q: What are some tips for effectively using Large Language Models?

A: To maximize the utility of Large Language Models, it is imperative to provide clear and specific prompts, fine-tune the models on domain-specific data if necessary, and critically evaluate the generated output for accuracy and coherence. Additionally, staying updated on the latest advancements in LLM research can help users harness their full potential.