As you begin your journey into AI cloud development, you must navigate a landscape rife with security challenges. As artificial intelligence becomes more deeply integrated into cloud platforms, the risks of data breaches and algorithm misuse grow with it. Understanding best practices and taking proactive measures is vital to safeguarding your systems and ensuring compliance. This post walks you through essential strategies for mitigating these risks while still capturing the benefits of AI in the cloud.

Unpacking the Threat Landscape in AI Cloud Development

Working in AI cloud development exposes you to a range of security threats that can compromise your projects and data. Because the technology evolves so quickly, traditional threats and emerging ones coexist. Understanding both is key to building robust security strategies that protect your AI solutions from exploitation and keep you compliant with industry standards.

Emerging Cyber Vulnerabilities

A variety of emerging cyber vulnerabilities continue to pose significant risks in AI cloud environments. These include threats related to machine learning models, such as adversarial attacks, where attackers manipulate input data to mislead models into making inaccurate predictions. Additionally, inadequately secured APIs can serve as gateways for attacks, enabling unauthorized data access and manipulation.
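One common way to harden an API against unauthorized access and tampering is to require every request to carry a signature computed with a shared secret. The sketch below uses HMAC-SHA256 from Python's standard library, with a timestamp check to limit replayed requests. The key, the five-minute window, the `/v1/predict` path, and the function names are all hypothetical choices for illustration; production systems typically layer this on top of TLS and a managed secrets store.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical shared secret for illustration; load a real one from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-real-secret"
MAX_SKEW_SECONDS = 300  # reject requests older than 5 minutes to limit replay attacks


def sign_request(method: str, path: str, body: bytes, timestamp: int, key: bytes = SECRET_KEY) -> str:
    """Return a hex HMAC-SHA256 signature over the request parts that must not be altered."""
    message = b"\n".join([method.upper().encode(), path.encode(), str(timestamp).encode(), body])
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(method: str, path: str, body: bytes, timestamp: int, signature: str,
                   now: Optional[int] = None, key: bytes = SECRET_KEY) -> bool:
    """Accept the request only if the timestamp is fresh and the signature matches."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > MAX_SKEW_SECONDS:
        return False
    expected = sign_request(method, path, body, timestamp, key)
    # compare_digest avoids short-circuiting on the first mismatch, which could leak timing information
    return hmac.compare_digest(expected, signature)


ts = 1_700_000_000
sig = sign_request("POST", "/v1/predict", b'{"features": [1, 2]}', ts)
print(verify_request("POST", "/v1/predict", b'{"features": [1, 2]}', ts, sig, now=ts + 10))   # True
print(verify_request("POST", "/v1/predict", b'{"features": [9, 9]}', ts, sig, now=ts + 10))   # False: body changed
print(verify_request("POST", "/v1/predict", b'{"features": [1, 2]}', ts, sig, now=ts + 900))  # False: too old
```

Signing the method, path, timestamp, and body together means an attacker cannot reuse a valid signature on a modified request.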

Impact of Data Breaches on AI Projects

The repercussions of data breaches in AI projects can be severe: loss of intellectual property, loss of customer trust, and substantial financial losses. Incidents like the 2020 Twitter hack, in which high-profile accounts were compromised, show how large the potential for reputational damage is. A breach not only disrupts the development cycle but also triggers resource-intensive investigations and potential legal ramifications.

The financial stakes alone are high: IBM's 2021 Cost of a Data Breach Report put the average cost of a breach at $4.24 million, a sum that can be catastrophic for startups and painful even for established firms. The consequences extend further still. Breaches impede innovation as teams divert focus and funds from development to recovery, which can erode your competitive advantage in a rapidly evolving market. And if sensitive training data is compromised, the integrity of your AI models is undermined and ethical concerns about data misuse follow. Protecting your AI projects from breaches is vital for both sustainability and growth.
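A simple safeguard for training-data integrity is to record a cryptographic hash of every dataset file and re-check it before each training run, so silent modifications (such as flipped labels) are caught before they reach a model. The sketch below is a minimal, standard-library illustration; the function names and directory layout are assumptions, and the manifest itself must be stored somewhere an attacker cannot write to, or it proves nothing.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in 1 MiB chunks so large training shards never need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def build_manifest(data_dir: Path) -> Dict[str, str]:
    """Map every file under data_dir (by relative path) to its SHA-256 digest."""
    return {
        str(p.relative_to(data_dir)): sha256_file(p)
        for p in sorted(data_dir.rglob("*"))
        if p.is_file()
    }


def find_tampered(data_dir: Path, manifest: Dict[str, str]) -> List[str]:
    """List files that were modified, added, or deleted since the manifest was recorded."""
    current = build_manifest(data_dir)
    changed = [name for name, digest in current.items() if manifest.get(name) != digest]
    missing = [name for name in manifest if name not in current]
    return sorted(changed + missing)


with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train.csv").write_text("id,label\n1,0\n")
    baseline = build_manifest(root)  # store this where attackers cannot modify it
    (root / "train.csv").write_text("id,label\n1,1\n")  # simulate a flipped label
    print(find_tampered(root, baseline))  # ['train.csv']
```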

Regulatory Frameworks: Navigating Compliance Pitfalls

As AI cloud development expands, understanding the intricate web of regulatory frameworks becomes essential. Compliance failures can lead to significant repercussions, including fines and reputational damage. Navigating regulations such as GDPR and CCPA requires a proactive approach to data privacy and security across your organization. The Cloud Security Alliance (CSA) article "How do AI and Cloud Computing Affect Security Risks?" offers further insight into how AI, cloud security, and compliance strategies intersect.

Understanding Global Data Protection Standards

Data protection regulations such as the GDPR in the EU and the CCPA in California set strict rules for how personal data is handled. Familiarizing yourself with these requirements helps ensure your AI cloud applications do not unknowingly compromise user data. Non-compliance can bring hefty penalties, so build these standards into your development process from the start.
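Building these standards into the development process often starts with data minimization and pseudonymization before records ever reach a training pipeline. The sketch below drops a field the model does not need and replaces direct identifiers with a keyed HMAC hash, so records can still be joined without exposing raw values. The field names and key are hypothetical. Note that under the GDPR, pseudonymized data generally still counts as personal data, so this reduces risk rather than taking the data out of scope.

```python
import hashlib
import hmac
from typing import Dict

# Hypothetical key; store it in a secrets manager, separately from the pseudonymized data.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Assumed field names for illustration only.
DIRECT_IDENTIFIERS = {"email", "phone"}
DROP_FIELDS = {"full_name"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed hash; normalizing first keeps joins consistent."""
    return hmac.new(key, value.strip().lower().encode(), hashlib.sha256).hexdigest()


def prepare_record(record: Dict[str, str]) -> Dict[str, str]:
    """Drop fields the model does not need and pseudonymize direct identifiers."""
    cleaned = {}
    for name, value in record.items():
        if name in DROP_FIELDS:
            continue  # data minimization: this field never enters the training set
        cleaned[name] = pseudonymize(value) if name in DIRECT_IDENTIFIERS else value
    return cleaned


row = {"full_name": "Ada Lovelace", "email": "Ada@Example.com", "country": "UK"}
print(prepare_record(row))
```

A keyed hash is used instead of a plain SHA-256 because common identifiers like email addresses are easy to guess and hash; without the key, an attacker cannot rebuild the mapping.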

Adapting to Evolving AI Legislation

AI legislation is an ever-evolving landscape, requiring you to stay informed on new laws aimed at regulating the use of AI technologies. These laws, often enacted in response to emerging technologies and societal concerns, can vary significantly across regions. Continuous monitoring of AI regulations ensures your cloud solutions remain compliant and secure.

The dynamic nature of AI legislation means you must revise your compliance strategies proactively. As governments around the world introduce rules governing ethical AI use, staying ahead of these changes is essential. Legal experts who specialize in technology law can advise you on adapting your cloud solutions to upcoming legislative requirements. Regularly attending workshops and conferences focused on AI legislation will help you grasp potential impacts early, so you can restructure your compliance framework in time.

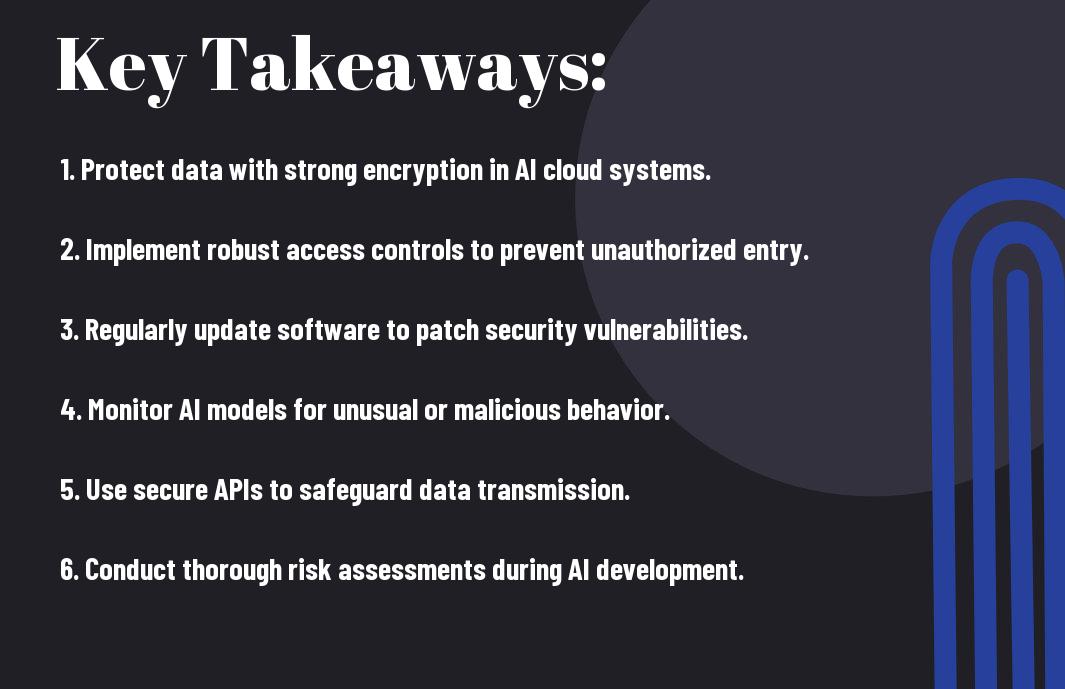

Strategies for Robust Security Protocols

Your approach to security protocols should be multi-faceted, incorporating encryption, access controls, and regular security audits. Automating security tasks can further enhance your effectiveness in identifying and mitigating vulnerabilities. By integrating these measures at every stage of your AI cloud development lifecycle, you not only secure your data but also bolster consumer confidence.
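Access controls are easiest to reason about when they deny by default and log every decision for later audits. The sketch below shows a minimal role-based access control (RBAC) check; the roles, permission strings, and in-memory audit log are hypothetical stand-ins for whatever identity provider and logging pipeline you actually use.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical roles and permissions for an AI platform; replace with your own resources.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"dataset:read", "model:train", "model:read"},
    "ml_engineer": {"model:read", "model:deploy"},
    "auditor": {"audit_log:read"},
}

# Every decision is recorded so periodic security audits can review who tried to access what.
AUDIT_LOG: List[Tuple[str, str, bool]] = []


@dataclass
class User:
    name: str
    roles: Set[str] = field(default_factory=set)


def is_allowed(user: User, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles explicitly grants the permission."""
    allowed = any(permission in ROLE_PERMISSIONS.get(role, set()) for role in user.roles)
    AUDIT_LOG.append((user.name, permission, allowed))
    return allowed


alice = User("alice", {"data_scientist"})
print(is_allowed(alice, "model:train"))   # True
print(is_allowed(alice, "model:deploy"))  # False: training and deployment rights are kept separate
print(AUDIT_LOG[-1])                      # ('alice', 'model:deploy', False)
```

Unknown roles map to an empty permission set, so a misconfigured account fails closed rather than open.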

Integrating AI-Specific Security Measures

AI systems require tailored security approaches, such as implementing adversarial training to defend against specific threats. Additionally, using machine learning algorithms to continuously monitor for unusual activity can help you quickly identify potential breaches or anomalies, keeping your environment secure and resilient against evolving threats.
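To make adversarial training concrete, the sketch below uses the Fast Gradient Sign Method (FGSM), one well-known way to craft adversarial inputs, against a tiny logistic-regression model written in pure Python. During adversarial training, each clean example is paired with an FGSM-perturbed copy generated against the current model. The synthetic data, the perturbation budget `eps=0.5`, and all function names are illustrative assumptions; real systems would use a deep-learning framework and stronger attacks, and no particular accuracy gain is implied by this toy setup.

```python
import math
import random
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, int]]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict_proba(w: Vector, b: float, x: Vector) -> float:
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v: float) -> int:
    return (v > 0) - (v < 0)


def fgsm(w: Vector, b: float, x: Vector, y: int, eps: float) -> Vector:
    """Fast Gradient Sign Method: shift each feature by eps in the direction that increases the loss."""
    # For logistic regression with cross-entropy loss, the gradient w.r.t. the input is (p - y) * w
    error = predict_proba(w, b, x) - y
    return [xi + eps * sign(error * wi) for xi, wi in zip(x, w)]


def train(data: Dataset, eps: float, adversarial: bool,
          epochs: int = 50, lr: float = 0.1, seed: int = 0) -> Tuple[Vector, float]:
    rng = random.Random(seed)
    samples = list(data)
    w, b = [0.0] * len(samples[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(samples)
        for x, y in samples:
            batch = [(x, y)]
            if adversarial:
                # Adversarial training: also fit a perturbed copy crafted against the current model
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                error = predict_proba(w, b, xb) - yb  # gradient of cross-entropy w.r.t. the logit
                w = [wi - lr * error * xi for wi, xi in zip(w, xb)]
                b -= lr * error
    return w, b


def accuracy(w: Vector, b: float, data: Dataset, eps: float = 0.0) -> float:
    """Accuracy on clean inputs (eps=0) or on FGSM-perturbed inputs (eps>0)."""
    correct = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        correct += int((predict_proba(w, b, x_eval) >= 0.5) == (y == 1))
    return correct / len(data)


# Synthetic two-cluster data, made up purely for illustration
gen = random.Random(42)
data: Dataset = [([gen.gauss(1.0, 0.5), gen.gauss(1.0, 0.5)], 1) for _ in range(100)]
data += [([gen.gauss(-1.0, 0.5), gen.gauss(-1.0, 0.5)], 0) for _ in range(100)]

for label, adv in [("standard", False), ("adversarial", True)]:
    w, b = train(data, eps=0.5, adversarial=adv)
    print(label, "clean:", accuracy(w, b, data), "under FGSM:", accuracy(w, b, data, eps=0.5))
```

Comparing clean accuracy with accuracy under attack is the basic habit this illustrates: a model that looks accurate on clean inputs can still be fragile under small, deliberate perturbations.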

Building a Culture of Security Awareness

Instilling a security-first mindset across your team is crucial for long-term protection. Providing regular training sessions and updates on emerging threats helps foster an environment where everyone is vigilant and proactive about security measures.

To build this culture effectively, engage your team with interactive workshops that simulate potential attacks and responses. Share real-world case studies of breaches relevant to your industry, emphasizing lessons learned. Encourage open discussion of security vulnerabilities to foster trust and accountability. Reward employees who proactively identify security concerns, turning awareness into a shared responsibility that permeates your organization.

The Role of Continuous Monitoring and Response

Continuous monitoring and response are vital components of managing security in AI cloud development. Threats evolve daily and sophisticated new attacks keep emerging, so security demands a proactive stance. Tracking metrics and analytics lets you recognize trends and vulnerabilities in real time. For a deeper understanding, see "Navigating the AI Security Risks: Understanding the Top 10 …".

Implementing Real-time Security Oversight

Establishing real-time security oversight involves implementing tools that continuously scan your systems for irregularities and potential breaches. Automated alert systems can notify your team of suspicious activities, allowing immediate investigation and mitigation. Integrating machine learning can assist in identifying patterns of malicious behavior, enhancing your overall security framework.
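As a starting point for automated alerts, the sketch below flags a metric (here, failed logins per minute) when it deviates sharply from a rolling baseline. It uses a simple z-score rule as a statistical stand-in for the machine-learning detectors mentioned above; the window size, threshold, metric, and class name are all hypothetical and would need tuning against your own traffic.

```python
import math
from collections import deque
from typing import Deque, Optional


class ZScoreAlerter:
    """Flag values that sit more than `threshold` standard deviations from a rolling mean."""

    def __init__(self, window: int = 30, threshold: float = 3.0, min_history: int = 10):
        self.history: Deque[float] = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history  # stay silent until there is enough baseline data

    def observe(self, value: float) -> Optional[str]:
        alert = None
        if len(self.history) >= self.min_history:
            mean = sum(self.history) / len(self.history)
            std = math.sqrt(sum((v - mean) ** 2 for v in self.history) / len(self.history))
            if std > 0 and abs(value - mean) / std > self.threshold:
                alert = f"ALERT: value {value} is {abs(value - mean) / std:.1f} std devs from mean {mean:.1f}"
        self.history.append(value)
        return alert


alerter = ZScoreAlerter()
failed_logins_per_minute = [4, 5, 6, 5, 4, 6, 5, 5, 4, 6, 5, 4, 48]
for count in failed_logins_per_minute:
    message = alerter.observe(count)
    if message:
        print(message)  # only the spike to 48 triggers an alert
```

This version adds every value, including anomalies, to the baseline so that legitimate level shifts are eventually accepted; an attacker who ramps activity up slowly could exploit that, which is one reason production systems combine several detectors.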

Developing a Rapid Response Framework

A robust rapid response framework equips your organization to react swiftly to security incidents, minimizing damage. This includes having a designated response team trained to manage breaches, clearly defined communication strategies, and established protocols that guide actions during an incident. This framework not only helps in mitigating immediate threats but also aids in preventing future occurrences by analyzing past incidents.

Investing in a rapid response framework is essential for maintaining resilience against security threats. Regular drills and simulations prepare your team to handle a range of compromise scenarios efficiently. Reviews of past incidents, including breaches at large organizations, suggest that timely communication and decisive action can substantially shorten recovery time. Practicing these skills keeps your team adept at handling uncertainty while reinforcing a culture of security awareness across your organization.
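Analyzing past incidents is easier when you track a few response metrics consistently. The sketch below computes the mean time to contain and the mean time to resolve from a list of incident records, which lets you check whether drills are actually shortening recovery. The `Incident` fields and the sample records are made up for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean
from typing import Dict, List


@dataclass
class Incident:
    """Hypothetical post-incident record; the field names are illustrative."""
    incident_id: str
    detected_at: datetime
    contained_at: datetime
    resolved_at: datetime


def hours(delta: timedelta) -> float:
    return delta.total_seconds() / 3600


def response_metrics(incidents: List[Incident]) -> Dict[str, float]:
    """Mean hours from detection to containment and from detection to full resolution."""
    return {
        "mean_time_to_contain_h": mean(hours(i.contained_at - i.detected_at) for i in incidents),
        "mean_time_to_resolve_h": mean(hours(i.resolved_at - i.detected_at) for i in incidents),
    }


# Made-up sample records for illustration
past_incidents = [
    Incident("INC-1", datetime(2024, 3, 1, 9), datetime(2024, 3, 1, 11), datetime(2024, 3, 1, 21)),
    Incident("INC-2", datetime(2024, 3, 5, 14), datetime(2024, 3, 5, 18), datetime(2024, 3, 6, 14)),
]
print(response_metrics(past_incidents))
# {'mean_time_to_contain_h': 3.0, 'mean_time_to_resolve_h': 18.0}
```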

Cultivating Trust in AI Cloud Solutions

Building trust in AI cloud solutions hinges on a demonstrable commitment to security, transparency, and ethical practices. As organizations adopt these technologies, prioritizing strong security measures builds confidence among users and stakeholders. Robust security means more than protecting data; it also means resolving the underlying cloud security issues that put that data at risk. The article "How AI Is Solving 6 Cloud Security Issues" argues that AI can significantly strengthen security protocols, which in turn reinforces users' trust.

Transparency and Ethical Considerations

Transparency is important in fostering trust in AI cloud solutions. You need to disclose how AI models are trained, the data utilized, and the methods applied to safeguard against biases or unethical outcomes. Being open about the decision-making processes and potential limitations of the AI systems helps cultivate a culture of responsibility and builds stakeholder confidence.
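One widely used way to publish this kind of disclosure is a "model card": a short, structured document describing a model's intended use, training data, evaluations, and limitations. The sketch below keeps a minimal card as a Python dataclass and serializes it to JSON so it can be versioned alongside the model. The field set and every value shown are hypothetical; adapt them to your own governance requirements.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class ModelCard:
    """Minimal, hypothetical disclosure record; extend the fields to match your governance needs."""
    model_name: str
    version: str
    intended_use: str
    training_data: str
    bias_evaluations: List[str] = field(default_factory=list)
    known_limitations: List[str] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(asdict(self), indent=2)


card = ModelCard(
    model_name="churn-predictor",
    version="1.2.0",
    intended_use="Rank existing customers by churn risk for retention outreach; not for credit or pricing decisions.",
    training_data="Pseudonymized 2022-2023 subscription records from EU and US regions.",
    bias_evaluations=["Recall compared across age bands and regions before release"],
    known_limitations=["Not validated for customers with under 3 months of history"],
)
print(card.to_json())
```

Stating what a model should not be used for is often as valuable to stakeholders as describing what it does.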

Engaging Stakeholders Through Open Communication

Open communication is vital for engaging stakeholders. Regular updates and forums can enable you to address concerns, share advancements, and solicit feedback on AI cloud development. This proactive approach not only enhances the relationship with users but also ensures that their voices are considered in upcoming enhancements. Creating a continuous dialogue encourages a sense of community and shared responsibility.

Inviting stakeholders to actively participate in discussions about AI cloud solutions can foster a collaborative environment. You could establish regular meetings, webinars, or online platforms specifically focused on AI developments. This not only provides transparency but also allows for a variety of perspectives to shape the technology’s evolution. Engaging end-users and clients can also spur innovative ideas and improvements while aligning your initiatives with their needs and expectations. Ultimately, this open communication strategy helps to forge lasting trust and loyalty in your AI cloud offerings.

Summing up

So, as you venture into AI cloud development, staying vigilant about security challenges is vital for safeguarding your projects and data. By adopting best practices such as robust access controls, continuous monitoring, and regular updates, you can effectively mitigate risks. Educating yourself on emerging threats will empower you to create a more secure environment. Embrace these strategies to enhance your overall security posture, ensuring a safer and more resilient cloud experience for your AI initiatives.