This guide covers the key benefits of integrating vLLM, an open-source library for large language model (LLM) inference and serving, into your machine learning strategy. By adopting vLLM, you can serve models with higher throughput and make better use of GPU memory in natural language processing workloads. Whether you’re aiming to improve response times or make your deployments more adaptable, understanding what vLLM does, and what it does not do, will help you decide where it fits. Read on to see how vLLM can change the way you serve models.

Key Takeaways:

- Efficiency: vLLM improves computational efficiency at inference time, allowing faster text generation and quicker deployment.

- Scalability: vLLM scales to higher request volumes and larger models, including models split across multiple GPUs, without a proportional loss in performance.

- Cost-Effectiveness: By using GPU memory more efficiently, vLLM helps lower the operational cost of serving models.

- Flexibility: vLLM supports many model architectures, making it adaptable to different machine learning stacks and use cases.

- Unchanged Model Quality: vLLM optimizes how a model is served rather than the model itself, so accuracy depends on the model you choose; the gains come from speed and memory use.

Understanding vLLM

To appreciate the impact of vLLM on your machine learning strategy, it helps to understand its core components. vLLM is an open-source library for LLM inference and serving, designed to make running language models efficient and scalable so that you can handle large request volumes at high speed.

Definition of vLLM

vLLM is best understood as an inference engine: it loads a pretrained language model and runs text generation for many requests at once. The model supplies the ability to understand and generate human-like text; vLLM supplies the machinery to do so quickly and with less memory overhead, which makes it a significant asset for any ML strategy that depends on serving LLMs.

How vLLM Works

Although the internals of vLLM are complex, the core idea is to break the work of serving a model into manageable segments. Its PagedAttention technique stores the attention key-value (KV) cache in small fixed-size blocks, much as an operating system pages virtual memory, so memory is allocated as sequences grow instead of being reserved up front. This reduces wasted memory and lets more requests share a GPU.

Another key aspect is parallelism. vLLM batches incoming requests continuously, adding new requests to the running batch as earlier ones finish, which raises throughput. It can also split a model across several GPUs with tensor parallelism, so you can serve models too large for a single device. Note that vLLM performs inference only: it does not train or update the model, so any improvement in output quality over time comes from deploying newer model versions.
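One way to picture the "manageable segments" idea is block-wise allocation of the attention cache, the principle behind PagedAttention. The toy allocator below is a sketch in plain Python, not vLLM's implementation; the class name, block size, and request ids are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field

BLOCK_SIZE = 16  # tokens per block (illustrative value, not a vLLM setting)


@dataclass
class PagedCache:
    """Toy block allocator: each sequence owns a list of block ids."""
    num_blocks: int
    free: list = field(default_factory=list)
    tables: dict = field(default_factory=dict)   # seq_id -> list of block ids
    lengths: dict = field(default_factory=dict)  # seq_id -> tokens stored

    def __post_init__(self):
        # All blocks start out in the free pool.
        self.free = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> None:
        """Reserve room for one more token, taking a new block only when needed."""
        n = self.lengths.get(seq_id, 0)
        if n % BLOCK_SIZE == 0:  # last block is full, or the sequence has none yet
            if not self.free:
                raise MemoryError("no free blocks")
            self.tables.setdefault(seq_id, []).append(self.free.pop())
        self.lengths[seq_id] = n + 1

    def release(self, seq_id: str) -> None:
        """Return all blocks of a finished sequence to the free pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


cache = PagedCache(num_blocks=8)
for _ in range(20):
    cache.append_token("request-a")
for _ in range(5):
    cache.append_token("request-b")

blocks_a = len(cache.tables["request-a"])  # 20 tokens need 2 blocks of 16
blocks_b = len(cache.tables["request-b"])  # 5 tokens need 1 block
free_before = len(cache.free)
cache.release("request-a")
free_after = len(cache.free)
```

Because blocks are handed out on demand and returned as soon as a request finishes, short requests do not hold memory sized for the longest possible sequence.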

Key Benefits of vLLM

One of the most significant advantages of integrating vLLM into your ML strategy is its ability to streamline model serving and reduce costs. By handling batching and memory management for you, it makes your operations more responsive under heavy or uneven load. For a deeper understanding, check out How to Use vllm: A Comprehensive Guide in 2024.

Improved Efficiency in ML Processes

With vLLM in place, you can significantly improve the efficiency of your machine learning workflows. Because batching and scheduling are handled automatically and the same hardware can serve more requests, you can allocate resources more judiciously, saving time and cost across projects.

Enhanced Model Performance

Improved serving performance is another key benefit of using vLLM in your ML strategy. Deployments become more responsive and handle diverse workloads, such as a mix of short and long prompts, more effectively. Results arrive faster at the same model quality, allowing you to keep pace in a rapidly evolving landscape.

These performance gains can be transformative for your organization. Faster, more predictable responses give you a more reliable foundation for applications that depend on model output. The scalability on offer also lets you absorb growing traffic without sacrificing speed, which ultimately benefits your bottom line.

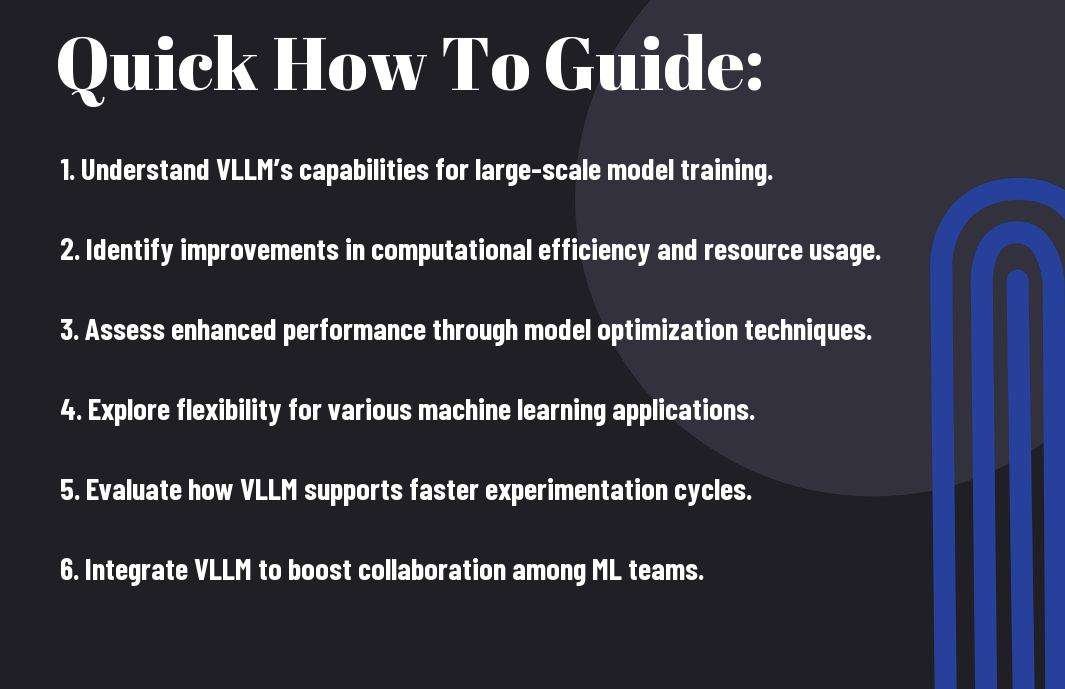

How to Implement vLLM in Your ML Strategy

Many organizations are discovering the advantages of implementing vLLM in their machine learning strategies. To integrate vLLM successfully, you’ll need to assess your existing infrastructure, choose the right tools and frameworks, and ensure proper training for your team. This strategic approach will allow you to get the most from vLLM and make your machine learning initiatives more effective.

Steps for Implementation

Steps to implement vLLM include evaluating your current ML stack, identifying the specific requirements for integration (models, hardware, expected traffic), selecting how you will run it, such as in-process batch inference or a standalone server, and conducting thorough testing. As you progress, gather feedback and iterate to optimize performance.
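As a starting point for the testing step, the sketch below shows offline batch generation with vLLM's Python API (`LLM`, `SamplingParams`, `generate`). The prompt template, the helper names, and the small example model are assumptions for illustration; running `run_offline_batch` requires vLLM to be installed on suitable hardware.

```python
def build_prompts(questions):
    """Wrap raw questions in a simple instruction template (hypothetical format)."""
    return [f"Answer briefly: {q}" for q in questions]


def run_offline_batch(questions, model_name="facebook/opt-125m"):
    """Generate one completion per question with vLLM's offline API.

    The import lives inside the function so this file still loads on
    machines where vLLM is not installed.
    """
    from vllm import LLM, SamplingParams

    params = SamplingParams(temperature=0.8, top_p=0.95, max_tokens=64)
    llm = LLM(model=model_name)  # loads the model weights
    outputs = llm.generate(build_prompts(questions), params)
    # Each result carries the prompt and a list of candidate completions.
    return [out.outputs[0].text for out in outputs]


# Building prompts needs no GPU, so it can be checked in any environment.
prompts = build_prompts(["What is PagedAttention?", "What is a KV cache?"])
```

Keeping prompt construction separate from generation means the template can be tested in isolation, while the generation call is exercised only where a GPU is available.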

Best Practices for Integration

Best practices for integrating vLLM into your ML strategy include clear documentation, proactive communication within your team, a phased rollout, disciplined data management, and robust monitoring.

Clear documentation and proactive communication keep the team aligned throughout the integration. A phased rollout, in which only a small share of traffic reaches the new deployment at first, lets you catch issues early and limits risk to your overall ML strategy. Careful data management protects the integrity and security of the prompts and outputs that flow through your models, and monitoring lets you evaluate performance continuously.
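A phased rollout can be sketched as a deterministic traffic split. In the example below, the backend names `"vllm"` and `"legacy"`, the user id format, and the percentage knob are all hypothetical; the point is only that hashing the user id keeps each user on the same backend as the rollout share grows.

```python
import hashlib


def rollout_bucket(user_id: str) -> int:
    """Map a user id to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def choose_backend(user_id: str, rollout_percent: int) -> str:
    """Route users whose bucket is below `rollout_percent` to the new backend."""
    return "vllm" if rollout_bucket(user_id) < rollout_percent else "legacy"


users = [f"user-{i}" for i in range(1000)]
# Fraction of this sample that reaches the new backend at a 10% rollout.
share_at_10 = sum(choose_backend(u, 10) == "vllm" for u in users) / len(users)
```

Because buckets are fixed per user, raising the percentage only ever moves users from the old backend to the new one, never back and forth.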

Tips for Maximizing vLLM Benefits

How you implement vLLM strongly affects how much value you get from it. To maximize the benefits, consider these tips:

- Leverage scalable architectures for performance

- Optimize data preprocessing techniques

- Incorporate regular updates to your models

- Utilize effective feedback loops for continuous learning

You will find more actionable insights in Decoding vLLM: Strategies for Your Language Model ….

Effective Training Techniques

An essential aspect of a vLLM deployment is the quality of the model you serve. vLLM handles inference rather than training, so these techniques apply upstream, before deployment: train on diverse datasets and use incremental learning to adapt your model to varying scenarios. Transfer learning, such as fine-tuning a pretrained model on your own data, can also significantly improve performance. By combining these methods, you can ensure the model you serve with vLLM remains robust and efficient.

Monitoring and Evaluation Strategies

When running vLLM, consistent monitoring and evaluation are key to ensuring optimal performance. Tracking your deployment’s effectiveness allows you to make informed adjustments as needed, keeping your systems aligned with your goals.

Tips for effective monitoring include tracking real-time serving metrics such as latency and throughput alongside measures of output quality, and evaluating dataset relevance regularly. Watch for model drift by regularly reviewing outputs so that your deployment keeps pace with changing data trends and demands. By focusing on these areas, you can maintain the effectiveness of your vLLM strategy and adapt swiftly to new challenges.
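The monitoring tips above can be made concrete with a small summary over logged requests. This is a minimal sketch: the `RequestRecord` fields, the 20% regression tolerance, and the sample data are assumptions, and a production setup would usually read these numbers from a metrics system.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestRecord:
    latency_s: float    # end-to-end latency in seconds
    output_tokens: int  # tokens generated for the request


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(records, window_s):
    """Summarize latency and throughput over a window of `window_s` seconds."""
    latencies = [r.latency_s for r in records]
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "tokens_per_s": sum(r.output_tokens for r in records) / window_s,
    }


def latency_regressed(baseline, current, tolerance=0.2):
    """Flag a regression when p95 latency grows by more than `tolerance`."""
    return current["p95_latency_s"] > baseline["p95_latency_s"] * (1 + tolerance)


# Synthetic sample: ten requests with latencies from 0.1 s to 1.0 s.
records = [RequestRecord(latency_s=0.1 * i, output_tokens=50) for i in range(1, 11)]
summary = summarize(records, window_s=10.0)
```

Comparing each new window against a stored baseline with `latency_regressed` gives a simple alert condition that can run after every deployment or model update.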

Factors to Consider Before Adopting vLLM

Before you integrate vLLM into your ML strategy, it’s crucial to evaluate several factors. Consider the following:

- Your current infrastructure capabilities

- The team expertise and readiness

- The overall cost implications

- Scalability potential for future growth

This analysis will help you determine whether vLLM aligns with your strategic objectives.

Infrastructure and Resources

Resources play a significant role when implementing vLLM. Assess whether your existing hardware and software can support it: GPU memory in particular must hold both the model weights and the KV cache for in-flight requests. Ensuring your resources can handle the required processing power and storage will improve serving efficiency, and investing in the right infrastructure upfront can save you from challenges down the line.
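A rough sizing exercise helps when assessing hardware. The sketch below estimates how much GPU memory the model weights take and how many tokens of attention cache fit in what remains. The model shape, the 24 GiB GPU, and the `usable_fraction` knob are illustrative assumptions, and the estimate ignores activations and framework overhead.

```python
from dataclasses import dataclass


@dataclass
class ModelShape:
    num_params: float         # total parameter count
    num_layers: int
    num_kv_heads: int
    head_dim: int
    bytes_per_value: int = 2  # 2 bytes per value for fp16/bf16


def weight_bytes(m: ModelShape) -> float:
    """Memory needed to hold the model weights."""
    return m.num_params * m.bytes_per_value


def kv_bytes_per_token(m: ModelShape) -> int:
    """One key and one value vector per layer and per KV head."""
    return 2 * m.num_layers * m.num_kv_heads * m.head_dim * m.bytes_per_value


def max_cached_tokens(m: ModelShape, gpu_bytes: float, usable_fraction: float = 0.9) -> int:
    """Tokens of cache that fit after the weights, ignoring activations."""
    budget = gpu_bytes * usable_fraction - weight_bytes(m)
    return max(0, int(budget // kv_bytes_per_token(m)))


GIB = 1024 ** 3
# Hypothetical 7-billion-parameter model served in 16-bit precision.
shape = ModelShape(num_params=7e9, num_layers=32, num_kv_heads=32, head_dim=128)
per_token = kv_bytes_per_token(shape)                   # bytes of cache per token
tokens = max_cached_tokens(shape, gpu_bytes=24 * GIB)   # shared by all active requests
```

The token budget is shared across every request in flight, so dividing it by a typical sequence length gives a first estimate of how many concurrent requests one GPU can hold.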

Team Readiness and Expertise

Team readiness is fundamental to a successful vLLM adoption. You need to evaluate whether your current team has the skills and knowledge to implement and maintain this technology effectively. Your team should be open to training and capable of adapting to the new workflows that vLLM introduces.

This readiness is more than a willingness to learn; it entails a solid foundation in machine learning, data management, and vLLM-specific tools. If your team lacks this expertise, specialized training or new hires may be necessary. Without proper expertise, you risk implementation roadblocks and inefficiencies. A strong team collaborates better, solves problems faster, and ultimately integrates vLLM into your strategy successfully.

Common Challenges and Solutions

Despite the numerous advantages of vLLM in your ML strategy, implementing it can present challenges. You may encounter issues such as integration complexities or scalability concerns. However, understanding these hurdles can pave the way for effective solutions. For an insightful overview, check out the Introduction to vLLM and PagedAttention.

Identifying Potential Obstacles

Solutions to the challenges of vLLM implementation often begin with a comprehensive evaluation of your existing systems. Conduct thorough assessments to pinpoint technical constraints and resource allocation that could hinder integration. This proactive approach will equip you with the knowledge to devise effective strategies.

Strategies for Overcoming Challenges

While tackling challenges in your vLLM implementation, adopting a systematic approach can yield positive outcomes. Engage in collaborative problem-solving sessions with your team to foster innovative ideas and solutions. Also, consider leveraging community resources and tutorials to enhance your understanding and skills.

Common strategies include forming a cross-functional team that prioritizes open communication to address issues promptly. Additionally, investigating custom solutions tailored to your unique requirements can significantly enhance performance. Regular training ensures your team remains updated on best practices, while utilizing community forums can provide you access to shared experiences. By addressing these elements effectively, you can create a more sustainable path forward for your vLLM integration.

Summing up

To wrap up, implementing vLLM in your ML strategy can significantly improve serving performance while optimizing resource utilization. By taking advantage of reduced memory consumption and improved speed, you can scale your machine learning projects to meet evolving demands. This lets you handle heavier workloads more efficiently and supports quicker iterations and deployments, giving you a competitive edge. Embracing vLLM means investing in a modern framework that positions your machine learning initiatives for long-term success.

Q: What are the primary advantages of incorporating vLLM into my machine learning strategy?

A: Implementing vLLM in your machine learning strategy offers several benefits. First, it efficiently serves models that understand and generate human-like text, which supports natural language processing applications such as chatbots, translation services, and content generation. Second, its memory management leaves room for more concurrent requests on the same hardware. Lastly, it can serve models you have fine-tuned on your own datasets, so customized solutions for particular business needs get the same serving efficiency.

Q: How does vLLM improve the efficiency of machine learning workflows?

A: vLLM enhances the efficiency of machine learning workflows in multiple ways. Firstly, its high inference throughput shortens evaluation and batch-generation runs, allowing teams to iterate faster and deploy sooner. Secondly, it offers an OpenAI-compatible HTTP server and loads models in common Hugging Face formats, which minimizes compatibility issues and streamlines development. Additionally, by handling request batching and memory management automatically, vLLM lets engineers focus on higher-level problem-solving rather than serving infrastructure.

Q: In what ways can vLLM contribute to the scalability of machine learning applications?

A: vLLM contributes to scalability by sustaining performance as request volume and the number of applications grow. Its architecture is designed to handle many concurrent requests without a sharp loss of efficiency or response time. Continuous batching lets it absorb fluctuating load, and larger models can be deployed across multiple GPUs when needed. Furthermore, a single vLLM deployment can serve multiple applications or user scenarios simultaneously, which is cost-effective for businesses that want to expand their machine learning capabilities without running a separate serving stack for each use case.
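To illustrate several applications sharing one deployment, the sketch below builds requests for the completions endpoint of vLLM's OpenAI-compatible server. The server address, the model name `my-served-model`, the application names, and the `X-App-Name` header are all assumptions for illustration; the requests are constructed but not sent.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumed address of a running server


def build_completion_request(app_name, prompt, model, max_tokens=64):
    """Build (but do not send) a POST request for the completions endpoint."""
    body = {"model": model, "prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        url=f"{BASE_URL}/completions",
        data=json.dumps(body).encode("utf-8"),
        # X-App-Name is a hypothetical header for telling applications apart in logs.
        headers={"Content-Type": "application/json", "X-App-Name": app_name},
        method="POST",
    )


app_prompts = {
    "support-bot": "Summarize this ticket: ...",
    "search": "Rewrite this query: ...",
}
requests_by_app = {
    app: build_completion_request(app, prompt, model="my-served-model")
    for app, prompt in app_prompts.items()
}
# With a server running, a request could be sent with:
#   urllib.request.urlopen(requests_by_app["search"])
```

Both applications target the same endpoint and model, so the server can batch their requests together instead of each application running its own copy of the model.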