Machine learning technologies are evolving rapidly, and understanding how LLM Ops differs from traditional ML Ops is crucial for managing your models effectively. In this blog post, you will explore how LLM Ops focuses on operating and optimizing large language models, which requires strategies and considerations not typically found in conventional ML workflows. By grasping these differences, you’ll be better able to deploy, monitor, and maintain your models efficiently and with strong performance.

Key Takeaways:

- Model Size and Complexity: LLM Ops must handle far larger models, often with billions of parameters, which creates unique challenges in deployment and scalability.

- Data Management: The volume and variety of data required for training LLMs differ significantly, necessitating more sophisticated data pipelines and management practices.

- Training Efficiency: LLM Ops demand substantial compute and careful optimization to keep training efficient, often requiring investment in specialized hardware such as GPUs.

- Continuous Learning: Unlike traditional ML, LLMs often benefit from fine-tuning on evolving datasets, making ongoing model updates a core part of the operational process.

- Evaluation Metrics: LLM assessment often focuses on language understanding and generation quality rather than the standard accuracy metrics used for traditional ML models.
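To make the evaluation point concrete, here is a minimal Python sketch contrasting exact-match accuracy with SQuAD-style token-level F1, one common way to give partial credit to generated text. It assumes simple lowercase whitespace tokenization; production LLM evaluation usually adds stronger normalization and often human or model-based review.

```python
from collections import Counter


def normalize(text: str) -> list[str]:
    """Lowercase and split on whitespace (a deliberately simple tokenizer)."""
    return text.lower().split()


def exact_match(prediction: str, reference: str) -> float:
    """1.0 only if the normalized token sequences are identical."""
    return float(normalize(prediction) == normalize(reference))


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall over bag-of-words overlap."""
    pred_tokens = normalize(prediction)
    ref_tokens = normalize(reference)
    overlap = sum((Counter(pred_tokens) & Counter(ref_tokens)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred_tokens)
    recall = overlap / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


reference = "The invoice was paid on March 3"
prediction = "the invoice was paid March 3"
print(exact_match(prediction, reference))
print(round(token_f1(prediction, reference), 3))
```

Exact match scores this near-miss answer 0.0, while token F1 scores it 12/13 (about 0.923), which better reflects that the answer is almost right.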

Understanding LLM Ops

While traditional ML Ops focuses on streamlining the deployment and management of machine learning models, LLM Ops encompasses specialized practices to optimize large language models (LLMs) throughout their lifecycle. This includes unique considerations for scalability, performance, and ethical deployment, enabling you to handle the complexity and demands of these advanced models effectively.

Definition and Overview

Some view LLM Ops as an extension of ML Ops, but it addresses challenges and methodologies specific to large language models. These models require distinct operational frameworks to ensure performance, usability, and compliance with legal standards, which makes LLM Ops both essential and specialized.

Key Features of LLM Ops

To fully appreciate LLM Ops, consider its key features, which include:

- Scalability: Ensure systems can handle increased data and model size.

- Performance Monitoring: Track metrics specific to language generation efficiency.

- Data Privacy: Maintain user confidentiality while processing large datasets.

- Model Interpretability: Make model decisions easier to understand and explain.

- Compliance and Ethics: Address fair usage and avoid biased responses.

You must also recognize how these features shape your approach to deploying LLMs and ensure their safe and effective operation.
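Performance monitoring for language generation usually tracks latency percentiles and generation throughput rather than a single accuracy number. The sketch below assumes a hypothetical `RequestLog` record holding per-request latency and output-token counts, and uses the nearest-rank percentile method; your serving stack’s logs will have their own schema.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestLog:
    latency_s: float    # wall-clock time to produce the full response
    output_tokens: int  # number of tokens generated for the request


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct in (0, 100]."""
    if not values:
        raise ValueError("no values to summarize")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(logs: list[RequestLog]) -> dict[str, float]:
    """Latency percentiles plus aggregate generation speed."""
    latencies = [log.latency_s for log in logs]
    total_tokens = sum(log.output_tokens for log in logs)
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        # Total tokens divided by total request time across the window.
        "tokens_per_s": total_tokens / sum(latencies),
    }


logs = [RequestLog(0.5, 25), RequestLog(1.5, 75), RequestLog(2.0, 100), RequestLog(4.0, 200)]
print(summarize(logs))
```

Tail latency (p95) matters for LLMs because long generations can make a few requests far slower than the median.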

With the growing prominence of LLMs, focusing on these key features becomes essential. You need to prioritize:

- Robust Infrastructure: Invest in systems that support high computational loads.

- Feedback Loops: Create mechanisms for continual model improvement based on user input.

- Automation Tools: Utilize automation to streamline the model deployment process.

- Cross-disciplinary Teams: Foster collaboration among data scientists, ethicists, and domain experts.

- Version Control: Implement strategies for tracking changes and ensuring model stability.

With these priorities in place, you can build more effective and responsible language models in your operations.
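Version control for LLM deployments often has to cover more than model weights, because the prompt template and generation parameters change behavior too. A minimal sketch, assuming a hypothetical in-memory `Registry` and illustrative config fields (the model name `example-llm-7b` is made up), derives a version id from a canonical JSON hash so identical configurations always map to the same id.

```python
import hashlib
import json


def fingerprint(config: dict) -> str:
    """Short, stable hash of a config; key order does not matter."""
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


class Registry:
    """Hypothetical in-memory registry; a real one would persist to storage."""

    def __init__(self) -> None:
        self._configs: dict[str, dict] = {}
        self.history: list[str] = []  # version ids in registration order

    def register(self, config: dict) -> str:
        version = fingerprint(config)
        if version not in self._configs:
            self._configs[version] = json.loads(json.dumps(config))  # defensive copy
            self.history.append(version)
        return version

    def get(self, version: str) -> dict:
        return self._configs[version]


registry = Registry()
v1 = registry.register({"model": "example-llm-7b", "prompt_template": "Summarize: {text}", "temperature": 0.2})
v2 = registry.register({"temperature": 0.2, "prompt_template": "Summarize: {text}", "model": "example-llm-7b"})
v3 = registry.register({"model": "example-llm-7b", "prompt_template": "Summarize: {text}", "temperature": 0.7})
print(v1 == v2, v1 == v3, registry.history)
```

Re-registering an unchanged config is a no-op, while any change to the prompt or a sampling parameter yields a new, traceable version.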

Comparing LLM Ops and Traditional ML Ops

If you’re exploring the world of LLM Ops, understanding how it differs from traditional ML Ops is key. The table below summarizes the main differences in operational practice:

| Aspect | LLM Ops | Traditional ML Ops |
|---|---|---|
| Model size | Large and complex | Generally smaller |
| Deployment frequency | Less frequent, higher resource demand | More frequent and agile |
| Data requirements | Extensive datasets for training | Moderate datasets |
| Scalability | Complex scaling strategies | Simpler scaling processes |
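The model-size row is easy to quantify. A rough rule is that the weights alone need parameter count × bytes per parameter. The sketch below applies that rule; it ignores activations, the KV cache used during generation, and optimizer state during training, all of which add substantial memory, and the parameter counts are illustrative.

```python
# Nominal storage per parameter; quantized formats add small overheads for scales.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Memory to hold the weights only, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for label, params, dtype in [
    ("traditional model, 100M params", 100e6, "fp32"),
    ("LLM, 7B params", 7e9, "fp16"),
    ("LLM, 70B params", 70e9, "fp16"),
    ("LLM, 70B params, 4-bit", 70e9, "int4"),
]:
    print(f"{label:<32} {weight_memory_gb(params, dtype):8.1f} GB")
```

A 100-million-parameter model in fp32 needs about 0.4 GB for its weights, while a 70-billion-parameter model in fp16 needs about 140 GB, more than a single 80 GB accelerator holds. That gap is one reason LLM serving calls for the more complex scaling strategies noted in the table.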

Fundamental Differences

The primary difference between LLM Ops and traditional ML Ops lies in the scale and complexity of the models involved, which affects data handling, resource management, and deployment strategies.

Unique Challenges Faced

Teams working in LLM Ops face challenges rarely encountered in traditional ML Ops: managing the massive computational resources required, ensuring data quality and relevance, and navigating the ethical concerns raised by what large language models output.

As large language models grow, you may face substantial infrastructure demands, higher costs, and a greater need for optimization. The need to mitigate bias and deploy AI responsibly further complicates workflows, so you must keep evolving your operational tactics and responding to emerging needs in a highly dynamic environment.
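To reason about those costs, a back-of-the-envelope comparison between token-priced API inference and always-on self-hosted GPUs can help. All prices and volumes below are hypothetical placeholders, not real vendor rates; substitute your own numbers.

```python
def api_monthly_cost(requests_per_day: int, tokens_per_request: int,
                     price_per_1k_tokens: float, days: int = 30) -> float:
    """Spend for token-priced inference: total tokens / 1000 * price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1000 * price_per_1k_tokens


def self_hosted_monthly_cost(num_gpus: int, price_per_gpu_hour: float,
                             days: int = 30) -> float:
    """Spend for GPUs billed around the clock, regardless of traffic."""
    return num_gpus * price_per_gpu_hour * 24 * days


# Hypothetical workload and prices, for illustration only.
api = api_monthly_cost(requests_per_day=10_000, tokens_per_request=1_500,
                       price_per_1k_tokens=0.002)
hosted = self_hosted_monthly_cost(num_gpus=2, price_per_gpu_hour=2.0)
print(f"API: ${api:,.2f}  self-hosted: ${hosted:,.2f}")
```

At this hypothetical volume (450 million tokens a month) the token-priced option is cheaper, but because self-hosted cost is flat while API cost scales with traffic, the comparison can flip as volume grows.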

How to Implement LLM Ops

Unlike traditional ML Ops, implementing LLM Ops requires a nuanced understanding of language models and their unique demands. For a related comparison, see What is the difference between MLOps and DevOps?. To integrate LLM Ops successfully, make sure your infrastructure supports large-scale training and inference, and foster collaboration between data scientists and engineers.

Essential Steps for Integration

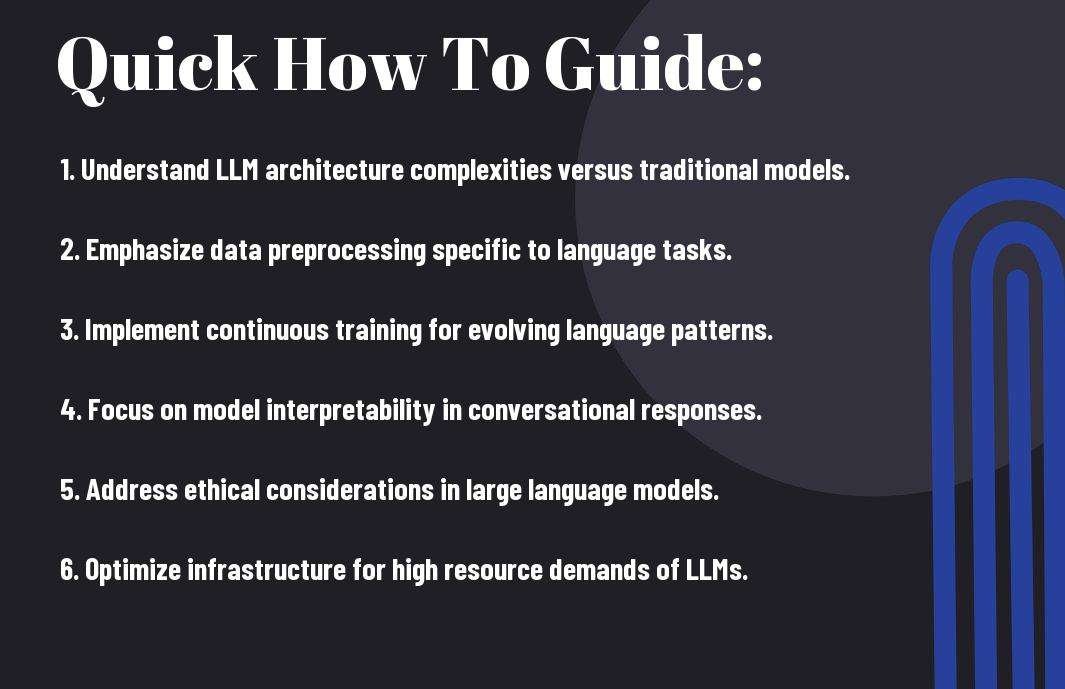

Implement an effective LLM Ops workflow by establishing a dedicated team, refining your data pipeline, and automating model deployment. Key steps include:

- Team Collaboration: Foster communication between data scientists and IT.

- Data Management: Ensure high-quality datasets for training.

- Monitoring: Implement feedback loops for model improvements.

A successful integration produces well-tuned models that deliver better performance.
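A feedback loop can start as simply as aggregating user ratings per deployed version and flagging versions that fall below a target. This sketch assumes hypothetical thumbs-up/down ratings and illustrative `min_votes` and `threshold` values; the right thresholds depend on your traffic and quality bar.

```python
from collections import defaultdict


class FeedbackTracker:
    """Aggregates thumbs-up/down ratings per deployed version (hypothetical schema)."""

    def __init__(self, min_votes: int = 20, threshold: float = 0.7) -> None:
        self.min_votes = min_votes    # ignore versions with too little data
        self.threshold = threshold    # minimum acceptable approval rate
        self._up = defaultdict(int)
        self._total = defaultdict(int)

    def record(self, version: str, thumbs_up: bool) -> None:
        self._total[version] += 1
        if thumbs_up:
            self._up[version] += 1

    def approval_rate(self, version: str) -> float:
        total = self._total.get(version, 0)
        return self._up.get(version, 0) / total if total else 0.0

    def needs_review(self) -> list[str]:
        """Versions with enough votes whose approval rate is below threshold."""
        return sorted(
            version
            for version, total in self._total.items()
            if total >= self.min_votes and self.approval_rate(version) < self.threshold
        )


tracker = FeedbackTracker(min_votes=10, threshold=0.7)
for i in range(10):
    tracker.record("v1", thumbs_up=i < 9)   # 9 of 10 positive
    tracker.record("v2", thumbs_up=i < 5)   # 5 of 10 positive
tracker.record("v3", thumbs_up=False)       # too few votes to judge
print(tracker.needs_review())
```

The `min_votes` guard keeps a single early complaint from triggering a review before there is enough signal.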

Tips for Best Practices

Adhering to best practices is essential for maximizing the effectiveness of your LLM Ops and can streamline your processes. Keep the following in mind:

- Regular Evaluations: Continuously assess model performance.

- Version Control: Maintain consistent model versions for reproducibility.

- Ethics and Compliance: Follow ethical guidelines in model training.

Together, these efforts produce reliable, ethically sound models that meet your organizational goals.

Continuous integration and deployment (CI/CD) practices are vital to LLM Ops success. Focus on:

- Automated Testing: Ensure every model update undergoes rigorous testing.

- Scalability: Design systems that can grow with your needs.

- User Feedback: Incorporate end-user insights post-implementation.

The adoption of these practices creates a responsive and adaptive approach to model management, enhancing reliability and effectiveness.
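Automated testing for LLM updates often takes the form of a regression gate: run the candidate and the current baseline on the same evaluation suite and block the release if any metric drops too far. The metric names and the 0.02 tolerance below are hypothetical, and the sketch assumes higher scores are better for every metric.

```python
def passes_gate(baseline: dict[str, float], candidate: dict[str, float],
                max_drop: float = 0.02) -> tuple[bool, list[str]]:
    """Return (ok, failures); higher is assumed better for every metric."""
    failures = []
    for metric, base_score in baseline.items():
        cand_score = candidate.get(metric)
        if cand_score is None:
            failures.append(f"{metric}: missing from candidate results")
        elif base_score - cand_score > max_drop:
            failures.append(f"{metric}: {cand_score:.3f} vs baseline {base_score:.3f}")
    return (not failures, failures)


# Hypothetical scores from running both versions on the same evaluation suite.
baseline = {"token_f1": 0.81, "valid_json_rate": 0.99, "safe_refusal_rate": 0.95}
candidate = {"token_f1": 0.84, "valid_json_rate": 0.93, "safe_refusal_rate": 0.95}

ok, failures = passes_gate(baseline, candidate)
print("release" if ok else "blocked", failures)
```

Here the candidate improves one metric but regresses another, so it is blocked; in a CI pipeline, a non-empty failure list would fail the build before deployment.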

Factors Influencing LLM Ops Success

Not every aspect of your operations may align seamlessly with LLM Ops. Key factors influencing its success include:

- Data Quality

- Model Performance

- Infrastructure Scalability

- Team Expertise

- Collaboration Practices

Understanding these factors will help you tailor your approach effectively.

Technology and Tools

To achieve efficient LLM Ops, choosing the right technology and tools is essential. This includes frameworks that support large language models, cloud computing resources for scalability, and monitoring tools that track performance metrics. Aligning your infrastructure with your operational needs makes your models easier to run reliably and at scale.

Team Skills and Collaboration

Skills in machine learning, data engineering, and communication are vital for successful collaboration in LLM Ops. A multidisciplinary team that openly shares knowledge and responsibilities fosters innovation and boosts productivity.

Your team needs a blend of technical and interpersonal skills, including expertise in natural language processing and DevOps practices. Regularly scheduled collaborative sessions can improve problem-solving and creativity. By managing team dynamics proactively, you can address challenges early, capitalize on opportunities, and implement LLM Ops more effectively.

Tips for Managing LLM Projects

As you embark on your LLM projects, focus on the following tips:

- Establish clear communication channels

- Leverage data quality assessment

- Implement iterative feedback loops

- Foster a culture of collaboration

- Utilize advanced monitoring tools

Recognizing the unique attributes of LLMs can significantly enhance your project outcomes. For more insights, check out Breaking it Down: MLOps vs DevOps – What You Need to ….

Setting Clear Objectives

In LLM projects, it’s vital to define precise objectives early. Clear objectives align your team’s efforts so everyone works toward common goals, which increases the efficiency and effectiveness of the project. Involve all stakeholders in this process to understand their perspectives and establish measurable outcomes.

Continuous Monitoring and Adjustments

Ongoing observation of your LLM systems is vital to their performance. Regularly check outputs and evaluate the models against your initial objectives to track progress and make necessary adjustments, adapting your strategies to real-time feedback so the project stays aligned with its goals.

Continuous monitoring allows you to identify anomalies and discrepancies in the system’s performance, enabling timely corrections. It’s important to use automated tools for analyzing data patterns and model outputs to mitigate risks associated with drift or overfitting. You should also involve key team members in this process, fostering collective responsibility for your project’s success. By staying proactive in adjustments, your LLM projects will be well-positioned to meet evolving demands and achieve optimal results.
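One common way to automate drift detection is the Population Stability Index (PSI), which compares how a feature is distributed across bins in a baseline window versus the current window: PSI is the sum over bins of (actual share - expected share) × ln(actual share / expected share). The sketch below applies it to prompt lengths in tokens; the choice of feature, the bin edges, and the small epsilon for empty bins are assumptions. A commonly quoted rule of thumb treats PSI above roughly 0.25 as a significant shift, but thresholds should be tuned to your data.

```python
import math
from bisect import bisect_right


def bin_proportions(values: list[float], edges: list[float]) -> list[float]:
    """Fraction of values per bin; `edges` are sorted interior cut points."""
    if not values:
        raise ValueError("need at least one value")
    counts = [0] * (len(edges) + 1)
    for value in values:
        counts[bisect_right(edges, value)] += 1
    return [count / len(values) for count in counts]


def psi(baseline: list[float], current: list[float], edges: list[float],
        eps: float = 1e-4) -> float:
    """Population Stability Index of `current` relative to `baseline`."""
    total = 0.0
    for expected, actual in zip(bin_proportions(baseline, edges),
                                bin_proportions(current, edges)):
        expected, actual = max(expected, eps), max(actual, eps)  # avoid log(0)
        total += (actual - expected) * math.log(actual / expected)
    return total


# Hypothetical prompt lengths (in tokens) from two monitoring windows.
edges = [50, 200, 500]
last_month = [30] * 25 + [100] * 50 + [300] * 25
this_week = [100] * 20 + [300] * 50 + [800] * 30

score = psi(last_month, this_week, edges)
print(f"PSI = {score:.2f}", "-> investigate drift" if score > 0.25 else "-> stable")
```

Identical windows score exactly 0, and the shift toward much longer prompts in this hypothetical data scores well above the 0.25 rule of thumb.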

Future Trends in LLM Ops

The landscape of LLM Ops is evolving rapidly, and organizations are increasingly adopting these models. As researchers and practitioners continue to refine their approaches, stay informed on the latest developments and best practices that distinguish LLM Ops from traditional ML Ops. For a comprehensive overview, check out MLOps Definition and Benefits to understand how these practices can enhance your operations.

Innovations on the Horizon

The integration of technologies such as AutoML, enhanced data pipelines, and real-time analytics is likely to reshape LLM Ops. These innovations could help you streamline workflows, improve model accuracy, and raise the overall quality of your applications.

Potential Industry Impact

Advancements in LLM Ops could significantly reshape many industries. From automating customer service to transforming content creation, you can anticipate a broad range of applications that improve efficiency.

With the adoption of LLM Ops, industries will likely see a significant shift in operational dynamics. You could benefit from increased productivity and better decision-making as the models enable faster processing of vast data sets. However, you should also be aware of challenges such as algorithmic bias and the need for responsible deployment. LLM Ops offers substantial opportunities, but it also requires careful attention to ethical considerations and best practices.

Final Words

Now that you understand the distinct nature of LLM Ops compared to traditional ML Ops practices, you can appreciate how this specialized approach enhances your model management, deployment, and monitoring processes. With the unique requirements of large language models, such as handling massive datasets and ensuring effective scalability, you are equipped to navigate the complexities and leverage the full potential of LLMs in your projects. By adopting these practices, you can ensure improved performance, reliability, and alignment with your AI goals.

Q: What are the key differences between LLM Ops and traditional ML Ops practices?

A: The primary distinction lies in the scale and complexity of the models used. LLM Ops focuses on managing large language models, which have billions of parameters and require substantial computational resources. This necessitates a different approach to data handling, infrastructure, and continuous integration/continuous deployment (CI/CD) pipelines. Furthermore, LLM Ops prioritizes prompt engineering and fine-tuning over traditional feature engineering, as the effectiveness of language models often relies on how well they understand and generate human-like text.

Q: How does data management differ in LLM Ops compared to traditional ML Ops?

A: Data management in LLM Ops involves dealing with vast amounts of unstructured data used for training large language models. Unlike traditional ML models, which often utilize structured datasets, LLMs can benefit from diverse data sources, such as text from books, articles, and social media. This requires specific data preprocessing techniques to ensure quality, relevance, and ethical considerations. Additionally, versioning of datasets is more complex, as data provenance must be tracked to assess its impact on model performance over time.
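Dataset versioning and provenance can be sketched with content hashes: record where each document came from, drop exact duplicates after light normalization, and derive an order-independent fingerprint for the whole dataset. The record format and normalization rule here are assumptions; real pipelines typically add near-duplicate detection and richer metadata.

```python
import hashlib
from collections import Counter


def build_manifest(records: list[tuple[str, str]]) -> tuple[list[dict], dict[str, int]]:
    """Keep the first copy of each normalized document and count kept docs per source."""
    seen: set[str] = set()
    kept = []
    for source, text in records:
        normalized = " ".join(text.lower().split())  # collapse case and whitespace
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate of an earlier document
        seen.add(digest)
        kept.append({"source": source, "sha256": digest, "text": text})
    return kept, dict(Counter(entry["source"] for entry in kept))


def dataset_version(kept: list[dict]) -> str:
    """Order-independent fingerprint of the kept documents."""
    combined = hashlib.sha256()
    for digest in sorted(entry["sha256"] for entry in kept):
        combined.update(digest.encode("ascii"))
    return combined.hexdigest()[:12]


records = [
    ("books", "The cat sat on the mat."),
    ("web", "the  cat sat on the   mat."),   # duplicate after normalization
    ("news", "Markets rose today."),
]
kept, per_source = build_manifest(records)
print(len(kept), per_source, dataset_version(kept))
```

Storing the per-source counts and the dataset fingerprint alongside each trained model makes it possible to trace a change in model behavior back to a change in training data.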

Q: What unique challenges do LLM Ops face that traditional ML Ops do not?

A: One of the significant challenges in LLM Ops is the need for extensive computational resources for training and inference, resulting in increased operational costs and energy consumption. Moreover, LLMs can generate outputs that are unpredictable or exhibit biases present in the training data, making monitoring and evaluating their performance more complex. Ensuring model transparency and explainability becomes vital, necessitating additional frameworks and tools not typically required in traditional ML Ops practices. Maintaining ethical standards in deployment also poses a unique challenge, as the repercussions of harmful outputs can be more pronounced with LLMs.