Designing effective prompts is essential for getting the most out of large language models (LLMs) in your projects. Whether you are a beginner or have some experience, understanding how to craft prompts can greatly affect the quality of your outputs. In this guide, you will learn key strategies for writing prompts that yield useful and relevant responses. By applying proven techniques and avoiding common pitfalls, you can improve your interactions with LLMs. For further insights, explore the LLM prompt engineering community to continue your learning.

Key Takeaways:

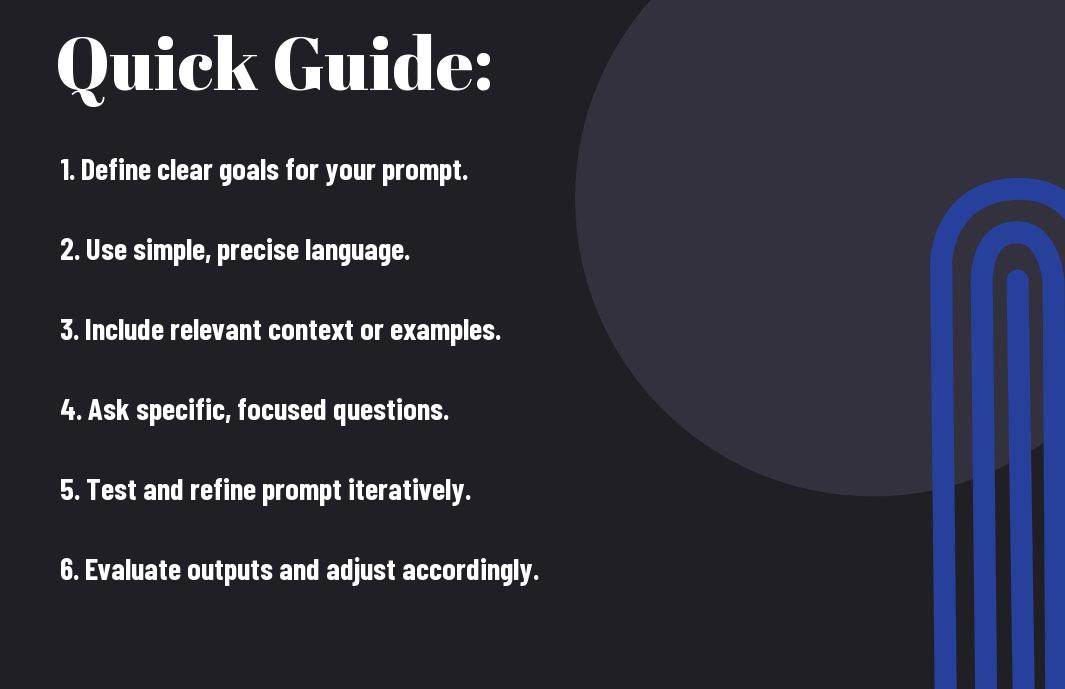

- Effective prompts should be clear and specific to guide the language model towards the desired output.

- Incorporating context and examples within the prompts can enhance the quality and relevance of the generated responses.

- Iterative testing and refinement of prompts are important for improving results and achieving more precise content generation.

Crafting Your Intent: Defining Clear Goals

Establishing a clear intent is the backbone of effective prompt design. Your objectives should guide every aspect of your prompt, ensuring that the language model understands your desired outcomes. Whether you aim to generate creative writing, provide information, or solve a problem, identifying your primary goals helps streamline the interaction. A well-defined intent also reduces ambiguity, leading to more relevant and accurate responses from the model.

The Power of Purposeful Prompting

Purposeful prompting not only sets the tone for the interaction but also aligns the model’s capabilities with your expectations. By clarifying your intent, you enable the model to deliver tailored responses that better meet your needs. For example, if you seek a summary of complex concepts, asking for “a brief summary of artificial intelligence applications” results in a focused output, rather than a general discussion.

Translating Your Objectives into Effective Language

Translating your objectives into concise and clear language is crucial in guiding the model effectively. The phrasing you choose directly impacts how well the model comprehends your intent. Avoid ambiguous terms; instead, use straightforward language that reflects what you want to achieve. For instance, rather than saying “elaborate on benefits,” specify “list three benefits of using renewable energy.” This clarity leads to more precise and relevant outputs, enhancing the overall usability of the model.

To translate your objectives into effective language, use specific action verbs and limit the scope of your requests. Verbs like “analyze,” “compare,” or “describe” frame your prompt so that it dictates the type of response you’re looking for. For example, instead of a vague request such as “information on climate change,” a refined prompt like “compare the effects of climate change on marine and terrestrial ecosystems” gives the model a clear framework. This improves the quality of the response and keeps the output aligned with your specific goals.
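As a rough illustration of pairing an action verb with a limited scope, the sketch below assembles a prompt from three parts. The function name `build_prompt` and the `ACTION_VERBS` set are hypothetical names chosen for this example, not part of any library.

```python
# Hypothetical helper: a minimal sketch, not a standard API.
ACTION_VERBS = {"analyze", "compare", "describe", "list", "explain", "outline"}


def build_prompt(verb: str, topic: str, scope: str = "") -> str:
    """Combine an action verb, a topic, and an optional scope into one prompt."""
    verb = verb.strip().lower()
    if verb not in ACTION_VERBS:
        # Reject vague verbs so the caller has to pick a concrete action.
        raise ValueError(f"unsupported action verb: {verb!r}")
    parts = [verb.capitalize(), topic.strip()]
    if scope:
        parts.append(scope.strip())
    return " ".join(parts) + "."


refined = build_prompt(
    "compare",
    "the effects of climate change",
    "on marine and terrestrial ecosystems",
)
print(refined)
```

Rejecting verbs outside the set is one possible design choice; it forces a request like “elaborate on benefits” to be restated with a concrete action before it is sent.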

Keywords That Connect: Leveraging Language Nuances

Employing the right keywords can transform a prompt from vague to precise, allowing LLMs to generate more relevant and targeted content. Analyzing the subtleties of language nuances will help you refine your prompts, ensuring they resonate well with the model’s understanding of context and intent. Effective keywords should not only reflect the central theme of your inquiry but also incorporate emotional and sensory language that evokes specific reactions or insights from the model.

Understanding Contextual Vocabulary for LLMs

Contextual vocabulary plays a pivotal role in how language models interpret your prompts. Using words that align with your topic while being mindful of their connotations can enhance the contextual relevance of the output. For example, instead of the word “car,” using “electric vehicle” can lead to more insightful discussions about sustainability and technology. Selecting terms that are not only fitting but also culturally or historically resonant can yield richer interactions with the model.

The Role of Phrasing and Syntax in Prompt Efficiency

Effective phrasing and syntax significantly influence the efficiency of prompts. Constructing your request in a straightforward manner minimizes ambiguity and guides the model toward your desired response. For instance, instead of saying “Tell me about dogs,” you might ask “What are the top three breeds of dogs for active families?” This specificity helps the LLM go deeper into the topic and produce more targeted content. One study reportedly found that properly structured prompts led to a 25% increase in relevant responses, which illustrates the impact of concise and accurate syntax.

Adopting particular syntactic structures can streamline communication with LLMs. Starting your prompts with action-oriented verbs, such as “List,” “Explain,” or “Describe,” gives the model clear instructions on what you seek. Avoiding overly complex sentences also prevents confusion, making it less likely that the LLM misreads your intent. For instance, “Outline the benefits of meditation for stress relief” yields better results than a convoluted, multi-part question. By honing your phrasing, you can significantly improve the quality of your interactions and the relevance of the outputs.
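The phrasing advice above can be approximated with a small check that runs before a prompt is sent. This is only a sketch: the verb list, the 40-word limit, and the name `lint_prompt` are assumptions made for illustration, and the heuristics are deliberately crude.

```python
import re

# Assumed list of leading verbs; extend it to suit your own prompts.
LEADING_VERBS = ("list", "explain", "describe", "outline", "compare", "analyze", "summarize")


def lint_prompt(prompt: str, max_words: int = 40) -> list[str]:
    """Return warnings for prompts that ignore the phrasing guidelines."""
    warnings = []
    words = prompt.split()
    # Strip punctuation from the first word before comparing it.
    first = re.sub(r"\W", "", words[0]).lower() if words else ""
    if first not in LEADING_VERBS:
        warnings.append("does not start with an action verb")
    if len(words) > max_words:
        warnings.append(f"longer than {max_words} words")
    if prompt.count("?") > 1:
        warnings.append("contains multiple questions")
    return warnings


print(lint_prompt("Outline the benefits of meditation for stress relief"))
print(lint_prompt("Tell me about dogs"))
```

A well-formed direct question will also trigger the first warning, so treat the output as a prompt to reconsider, not as a verdict.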

Iteration As Art: The Refinement Process

The process of refining your prompts is where the true artistry lies. Each iteration lets you assess how well the model responds to your input and make the necessary adjustments. You may start with a rough idea and, through systematic testing and feedback, arrive at a prompt that evokes richer, more relevant responses. Resources like “A Guide to Crafting Effective Prompts for Diverse Applications” cover other approaches to prompt refinement.

Techniques for Prompt Testing and Evaluation

Testing your prompts involves several techniques. In A/B testing, you compare different versions of a prompt to see which yields better results. Structured templates help produce consistent results across various scenarios. Analyzing performance metrics such as response relevance and coherence can guide you in making adjustments to refine your approach.
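A minimal A/B comparison might look like the sketch below. Everything here is illustrative: `fake_generate` is a hypothetical stand-in for a real model call, and `numbered_item_score` is a toy metric that only checks whether the response contains three numbered items.

```python
import re
from statistics import mean
from typing import Callable


def ab_test(
    variants: dict[str, str],
    generate: Callable[[str], str],
    score: Callable[[str], float],
    trials: int = 3,
) -> dict[str, float]:
    """Return the mean score of each prompt variant over several trials."""
    return {
        name: mean(score(generate(prompt)) for _ in range(trials))
        for name, prompt in variants.items()
    }


def fake_generate(prompt: str) -> str:
    # Hypothetical stand-in for a model call; replace with your own client.
    if "three" in prompt:
        return "1. Lower emissions\n2. Lower running costs\n3. Energy independence"
    return "Benefits can mean many different things."


def numbered_item_score(response: str) -> float:
    # Toy metric: fraction of the three requested numbered items present.
    items = re.findall(r"^\d+\.", response, flags=re.MULTILINE)
    return min(len(items), 3) / 3


variants = {
    "A": "elaborate on benefits",
    "B": "list three benefits of using renewable energy",
}
scores = ab_test(variants, fake_generate, numbered_item_score)
best = max(scores, key=scores.get)
print(scores, best)
```

With a real model, responses vary between calls, so running several trials per variant and averaging gives a steadier comparison than a single sample.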

Feedback Mechanisms: Learning from LLM Responses

Analyzing LLM responses offers valuable insight into the effectiveness of your prompts. By systematically collecting and assessing the outputs, you can identify patterns indicating the model’s understanding and areas needing improvement. This feedback loop enables you to fine-tune your prompts to elicit clearer, more aligned responses.

Employing effective feedback mechanisms involves not just reading outputs but also categorizing them based on quality metrics. Evaluate whether responses are relevant, coherent, or creative. Engage with your results actively; for example, if a prompt yields an unexpected or irrelevant output, dissect the structure of your input. Identify specific words or phrases that may trigger misinterpretations. This iterative learning process fosters a deeper understanding of how the language model interprets context and nuance, ultimately leading you to more successful prompt creations.
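One way to look for words that may trigger misinterpretations is to log a quality judgment for each prompt and then compare the vocabulary of the good and bad ones. The sketch below assumes you record two boolean ratings by hand; the `Review` class, the `suspect_terms` function, and the small stopword set are hypothetical choices for this example.

```python
from collections import Counter
from dataclasses import dataclass

# Small assumed stopword set so that filler words are not reported.
STOPWORDS = {"a", "an", "the", "of", "on", "for", "to"}


@dataclass
class Review:
    prompt: str
    relevant: bool
    coherent: bool


def suspect_terms(reviews: list[Review]) -> Counter:
    """Count words that appear only in prompts whose output was rated poorly."""
    good_words: set[str] = set()
    bad_words: Counter = Counter()
    for review in reviews:
        words = set(review.prompt.lower().split()) - STOPWORDS
        if review.relevant and review.coherent:
            good_words |= words
        else:
            bad_words.update(words)
    # Keep only the words never seen in a well-rated prompt.
    return Counter({w: n for w, n in bad_words.items() if w not in good_words})


reviews = [
    Review("list three benefits of renewable energy", relevant=True, coherent=True),
    Review("elaborate on benefits", relevant=False, coherent=True),
    Review("elaborate on energy", relevant=False, coherent=False),
]
flagged = suspect_terms(reviews)
print(flagged)
```

A word count like this only suggests where to look. Confirm a suspect term by rewriting the prompt without it and comparing the new output.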

Audience Awareness: Tailoring Prompts for Desired Outcomes

Understanding your audience is paramount to crafting effective prompts. By aligning your prompts with the audience’s expectations and knowledge level, you set the stage for optimal responses from the language model. Whether it’s for academic purposes, casual communication, or business needs, tailoring your approach enhances engagement and relevancy, leading to significantly improved outcomes.

Identifying User Needs and Expectations

Begin by carefully assessing what your audience seeks from the output. Consider factors such as their familiarity with the topic, their specific information requirements, and what problems you aim to address. For instance, a prompt targeting experienced professionals in a field should be much more technical than one aimed at laypersons. Engaging your audience effectively starts with understanding their unique context.

Customizing Tone and Style for Engagement

Adjusting the tone and style of your prompts can significantly impact engagement levels. A conversational approach may resonate well for casual readers, while a formal tone might be more appropriate for academic audiences. For example, a prompt asking for a budget report should maintain professionalism, while one requesting creative ideas for a birthday party can be lighthearted and playful. This alignment with audience expectations fosters deeper connections and better responses.

When customizing tone and style, it’s helpful to consider the medium through which your audience engages with the text. Social media content thrives on a relaxed and relatable tone, while technical documentation benefits from clarity and precision. Use vocabulary that matches your audience’s experience. Adding humor to a startup pitch could make your proposal memorable, while using straightforward language in a user manual ensures clarity. Ultimately, the right tone influences not just comprehension but also the likelihood of your audience taking the desired action.
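Tone selection can be kept in one place by mapping each audience to a tone instruction and appending it to the task. The audience keys, the wording of each instruction, and the name `tailor_prompt` below are assumptions for illustration only.

```python
# Assumed audience-to-tone mapping; adjust the wording to your own needs.
TONE_BY_AUDIENCE = {
    "academic": "Use a formal tone and precise terminology.",
    "business": "Use a professional, formal tone.",
    "casual": "Use a lighthearted, conversational tone.",
}


def tailor_prompt(task: str, audience: str) -> str:
    """Append the tone instruction for the given audience to a task."""
    if audience not in TONE_BY_AUDIENCE:
        raise ValueError(f"unknown audience: {audience!r}")
    # Normalize the trailing period so the two sentences join cleanly.
    return f"{task.strip().rstrip('.')}. {TONE_BY_AUDIENCE[audience]}"


print(tailor_prompt("Write a budget report", "business"))
print(tailor_prompt("Suggest creative ideas for a birthday party.", "casual"))
```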

Beyond the Basics: Advanced Prompt Techniques

Effective prompting extends beyond simple requests. You’ll discover advanced techniques that help you extract more precise and valuable responses from LLMs. These techniques bolster your ability to engage with the model, ensuring your interactions yield insightful outcomes. Here are some strategies to enhance your prompting capabilities:

- Incorporate specific contexts for tailored responses.

- Utilize hierarchical prompting for structured information.

- Leverage role play to simulate real-world scenarios.

- Experiment with emotional tones to influence responses.

| Technique | Purpose |
|---|---|
| Contextual Prompting | Enhances relevance and specificity. |
| Conditional Framing | Encourages creative and varied responses. |
| Feedback Loops | Improves response quality and understanding. |

Incorporating Constraints for Enhanced Control

Adding constraints to your prompts allows for greater precision in the model’s output. By specifying parameters—such as word count, tone, or format—you can guide the LLM towards the exact type of response you seek. For instance, instructing the model to provide a response in bullet points or limit the response to under 50 words can significantly sharpen its focus and relevance.
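Constraints such as format, tone, and word count can be appended to a task as explicit instructions and then checked against the response. The sketch below is one possible arrangement; `add_constraints` and `within_limit` are hypothetical helper names, and counting whitespace-separated words is a simplifying assumption.

```python
from typing import Optional


def add_constraints(
    task: str,
    *,
    max_words: Optional[int] = None,
    fmt: Optional[str] = None,
    tone: Optional[str] = None,
) -> str:
    """Append explicit format, tone, and length constraints to a task."""
    lines = [task.strip()]
    if fmt:
        lines.append(f"Format: {fmt}.")
    if tone:
        lines.append(f"Tone: {tone}.")
    if max_words is not None:
        lines.append(f"Limit the response to under {max_words} words.")
    return "\n".join(lines)


def within_limit(response: str, max_words: int) -> bool:
    """Check that a response stays under the word limit (whitespace split)."""
    return len(response.split()) < max_words


prompt = add_constraints(
    "Summarize the benefits of renewable energy",
    fmt="bullet points",
    max_words=50,
)
print(prompt)
```

Models do not always honor a length instruction, so a check like `within_limit` on the returned text is a useful complement to stating the constraint.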

Experimenting with Multi-Turn Interactions

Engaging in multi-turn interactions can enrich your experience by developing a conversational flow with the LLM. Through a series of back-and-forth exchanges, you can clarify, refine, and explore complex ideas in detail. This iterative dialogue not only yields more refined outputs but encourages the model to maintain context, creating a more coherent and relatable framework for discussion.

Expanding on multi-turn interactions opens doors to nuanced discussions. Consider starting with an open-ended question and then following up with clarifying inquiries based on the initial response. For example, after receiving a general answer, you could ask the model to elaborate on specific points or provide examples, facilitating deeper understanding. This technique fosters a collaborative dynamic, transforming the interaction into a rich, engaging dialogue that builds on previous exchanges, enriching both your learning and the resulting insights.
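A multi-turn exchange is often represented as a growing list of messages that is passed back to the model on every turn. The sketch below assumes a role/content dictionary layout, which is a common convention but not a requirement of any particular API, and it uses a hypothetical `echo_model` stub in place of a real model.

```python
from typing import Callable

Message = dict[str, str]


def ask(
    history: list[Message],
    user_text: str,
    reply_fn: Callable[[list[Message]], str],
) -> list[Message]:
    """Add a user turn, get a reply based on the full history, and return both."""
    history = history + [{"role": "user", "content": user_text}]
    answer = reply_fn(history)
    return history + [{"role": "assistant", "content": answer}]


def echo_model(history: list[Message]) -> str:
    # Hypothetical stub: a real model would read the whole history here.
    turns = sum(m["role"] == "user" for m in history)
    return f"(reply to turn {turns}: {history[-1]['content']})"


history: list[Message] = []
history = ask(history, "What is prompt engineering?", echo_model)
history = ask(history, "Give one concrete example.", echo_model)
for message in history:
    print(message["role"], "->", message["content"])
```

Because the full history is passed on each call, a follow-up such as “Give one concrete example.” can be interpreted in light of the earlier question.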

Summing up

With these considerations in mind, you can design prompts that optimize your interactions with LLMs. By applying the principles outlined in this guide, you will improve your ability to elicit meaningful responses tailored to your needs. To dig deeper into this subject, explore “Prompt Engineering Techniques for LLMs” and further expand your expertise in this area.

FAQ

Q: What are the key components of an effective prompt for LLMs?

A: An effective prompt typically includes clarity, context, and a specific request. Clarity ensures that the language used is straightforward, avoiding vague terms that could lead to misinterpretation. Providing context helps the LLM understand the background or situation related to the prompt, which can guide its responses. Lastly, being specific in what you are asking for—whether it’s an explanation, a story, or a comparison—can significantly enhance the relevance and accuracy of the output generated by the model.

Q: How can I test and refine my prompts to improve the responses from LLMs?

A: Testing and refining prompts can be approached through iterative experimentation. Start by creating a variety of prompts around the same topic and assessing the responses generated. Analyze which prompts yield the most informative or relevant answers. You may also want to tweak language, format, or structure based on the outputs you receive. Consider keeping track of the performance of different prompts to identify patterns or specific phrases that produce better-quality responses. Iteration is key, so don’t hesitate to adjust and try multiple versions until you find the most effective formulation.

Q: Are there common pitfalls to avoid when designing prompts for LLMs?

A: Yes, there are several common pitfalls to watch out for. One is overly complicated language that may confuse the model. Using jargon or ambiguous terms can lead to inaccurate outputs. Another pitfall is writing prompts that are too broad, which may result in generic responses rather than detailed insights. Additionally, failing to provide sufficient context can limit the model’s ability to give a relevant answer. It’s crucial to avoid leading questions that might bias the response or assume specific knowledge that the model may not have. Keeping prompts straightforward and focused will generally yield better results.